Evolution – Evidences for the Theory of Evolution

Introduction Evolution is a fundamental concept in biology that explains how life has changed and diversified over millions of years. The theory of evolution, primarily

Latest Articles

Evolution – Neo-Darwinism & Errors of Darwinism

Introduction Evolution is the process through which organisms undergo gradual changes over generations, leading to the diversity of life on Earth. Charles Darwin’s theory of

Theory of Darwin or Darwinism

Class 10 Biology – West Bengal Board Introduction Darwin’s theory of evolution, also known as Darwinism, was proposed by Charles Darwin in his book On

Biology concepts:

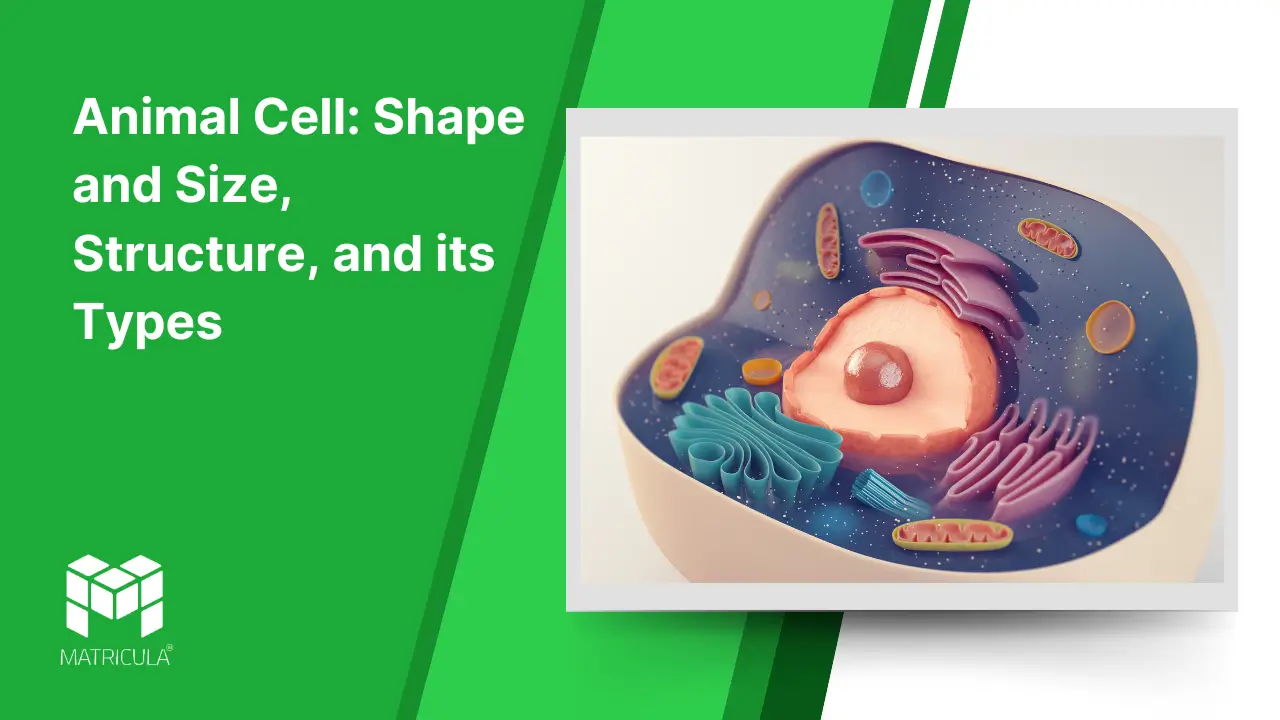

The animal cell is one of the fundamental units of life, playing a pivotal role in the biological processes of animals. It is a eukaryotic cell, meaning it possesses a well-defined nucleus enclosed within a membrane, along with various specialized organelles. Let’s explore its shape and size, structural components, and types in detail.

Shape and Size of Animal Cells

Animal cells exhibit a variety of shapes and sizes, tailored to their specific functions. Unlike plant cells, which are typically rectangular due to their rigid cell walls, animal cells are more flexible and can be spherical, oval, flat, elongated, or irregularly shaped. This flexibility is due to the absence of a rigid cell wall, allowing them to adapt to different environments and functions.

- Size: Animal cells are generally microscopic, with sizes ranging from 10 to 30 micrometers in diameter. Some specialized cells, like nerve cells (neurons), can extend over a meter in length in larger organisms.

- Shape: The shape of an animal cell often reflects its function. For example, red blood cells are biconcave to optimize oxygen transport, while neurons have long extensions to transmit signals efficiently.

Structure of Animal Cells

Animal cells are composed of several key components, each performing specific functions essential for the cell’s survival and activity. Below are the major structural elements:

Cell Membrane (Plasma Membrane):

- A semi-permeable membrane made up of a lipid bilayer with embedded proteins.

- Regulates the entry and exit of substances, maintaining homeostasis.

Cytoplasm:

- A jelly-like substance that fills the cell and provides a medium for biochemical reactions.

- Houses the organelles and cytoskeleton.

Nucleus:

- The control center of the cell, containing genetic material (DNA) organized into chromosomes.

- Surrounded by the nuclear envelope, it regulates gene expression and cell division.

Mitochondria:

- Known as the powerhouse of the cell, mitochondria generate energy in the form of ATP through cellular respiration.

Endoplasmic Reticulum (ER):

- Rough ER: Studded with ribosomes, it synthesizes proteins.

- Smooth ER: Involved in lipid synthesis and detoxification processes.

Golgi Apparatus:

- Modifies, sorts, and packages proteins and lipids for transport.

Lysosomes:

- Contain digestive enzymes to break down waste materials and cellular debris.

Cytoskeleton:

- A network of protein fibers providing structural support and facilitating intracellular transport and cell division.

Centrioles:

- Cylindrical structures involved in cell division, helping to form the spindle fibers during mitosis.

Ribosomes:

- Sites of protein synthesis, either free-floating in the cytoplasm or attached to the rough ER.

Vesicles and Vacuoles:

- Vesicles transport materials within the cell, while vacuoles store substances, though they are smaller and less prominent compared to those in plant cells.

Types of Animal Cells

Animal cells are specialized to perform various functions, leading to the existence of different types. Below are some primary examples:

Epithelial Cells:

- Form the lining of surfaces and cavities in the body, offering protection and enabling absorption and secretion.

Muscle Cells:

- Specialized for contraction, facilitating movement. They are categorized into skeletal, cardiac, and smooth muscle cells.

Nerve Cells (Neurons):

- Transmit electrical signals throughout the body, enabling communication between different parts.

Red Blood Cells (Erythrocytes):

- Transport oxygen and carbon dioxide using hemoglobin.

White Blood Cells (Leukocytes):

- Play a critical role in immune response by defending the body against infections.

Reproductive Cells (Gametes):

- Sperm cells in males and egg cells in females are involved in reproduction.

Connective Tissue Cells:

- Include fibroblasts, adipocytes, and chondrocytes, contributing to structural support and storage functions.

Biodiversity encompasses the variety of life on Earth, including all organisms, species, and ecosystems. It plays a crucial role in maintaining the balance and health of our planet’s ecosystems. Among the different levels of biodiversity, species diversity—the variety of species within a habitat—is particularly vital to ecosystem functionality and resilience. However, human activities and environmental changes have significantly impacted biodiversity, leading to its decline. This article explores the importance of species diversity, the causes of biodiversity loss, and its effects on ecosystems and human well-being.

Importance of Species Diversity to the Ecosystem

Species diversity is a cornerstone of ecosystem health. Each species has a unique role, contributing to ecological balance and providing critical services such as:

Ecosystem Stability: Diverse ecosystems are more resilient to environmental changes and disturbances, such as climate change or natural disasters. A variety of species ensures that ecosystems can adapt and recover efficiently.

Nutrient Cycling and Productivity: Different species contribute to nutrient cycling, soil fertility, and overall productivity. For instance, plants, fungi, and decomposers recycle essential nutrients back into the soil.

Pollination and Seed Dispersal: Pollinators like bees and birds facilitate plant reproduction, while seed dispersers ensure the spread and growth of vegetation.

Climate Regulation: Forests, wetlands, and oceans—supported by diverse species—act as carbon sinks, regulating the Earth’s temperature and mitigating climate change.

Human Benefits: Biodiversity provides resources such as food, medicine, and raw materials. Cultural, recreational, and aesthetic values also stem from species diversity.

Causes of Loss of Biodiversity

Several factors, most of which are anthropogenic, contribute to biodiversity loss:

Habitat Destruction: Urbanization, deforestation, and agriculture often result in habitat fragmentation or complete destruction, leading to the displacement and extinction of species.

Climate Change: Altered temperature and precipitation patterns disrupt ecosystems, forcing species to adapt, migrate, or face extinction.

Pollution: Contamination of air, water, and soil with chemicals, plastics, and waste harms wildlife and degrades habitats.

Overexploitation: Unsustainable hunting, fishing, and logging deplete species populations faster than they can recover.

Invasive Species: Non-native species introduced intentionally or accidentally often outcompete native species, leading to ecological imbalances.

Diseases: Pathogens and pests can spread rapidly in altered or stressed ecosystems, further threatening species.

Effects of Loss of Biodiversity

The decline in biodiversity has profound and far-reaching consequences:

Ecosystem Collapse: Loss of keystone species—those crucial to ecosystem functioning—can trigger the collapse of entire ecosystems.

Reduced Ecosystem Services: Biodiversity loss undermines services like pollination, water purification, and climate regulation, directly affecting human livelihoods.

Economic Impacts: Declines in biodiversity affect industries such as agriculture, fisheries, and tourism, resulting in economic losses.

Food Security Risks: The reduction in plant and animal diversity threatens food supply chains and agricultural resilience.

Health Implications: Loss of species reduces the potential for medical discoveries and increases vulnerability to zoonotic diseases as ecosystems degrade.

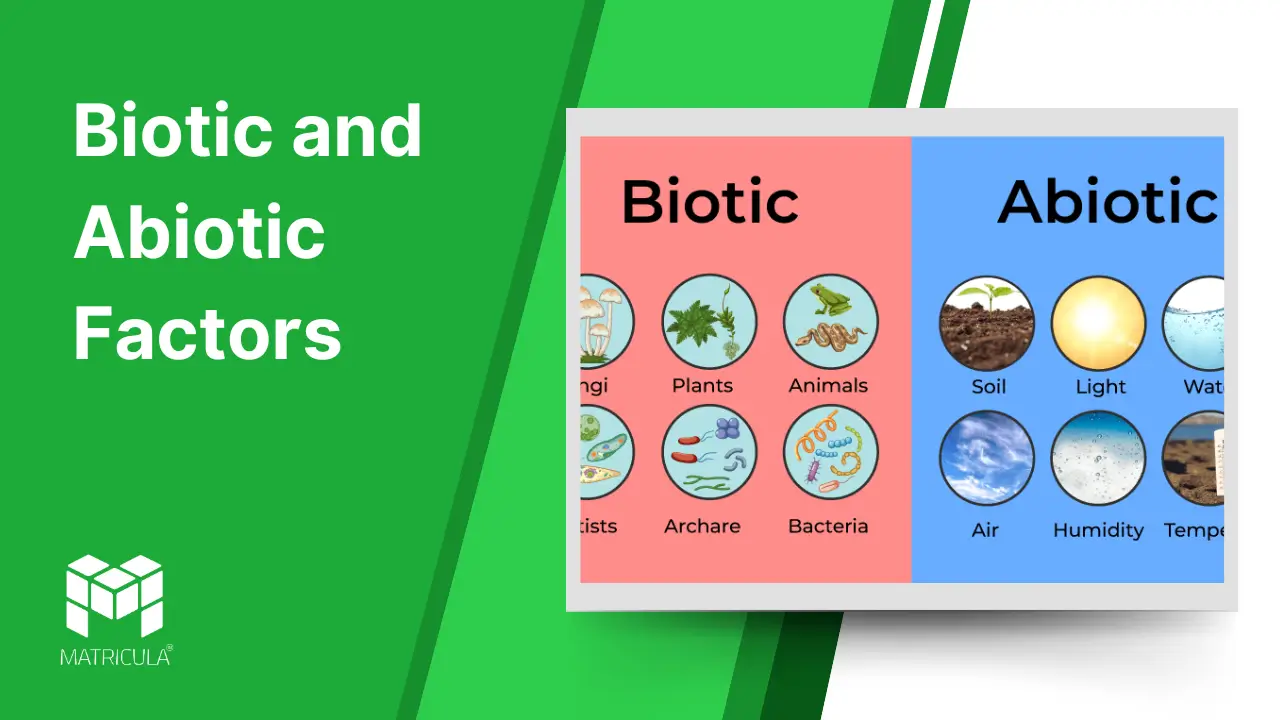

Ecosystems are dynamic systems formed by the interaction of living and non-living components. These components can be categorized into biotic and abiotic factors. Together, they shape the structure, functionality, and sustainability of ecosystems. Understanding these factors is crucial to studying ecology, environmental science, and the intricate relationships within nature.

Biotic Factors

Biotic factors are the living components of an ecosystem. These include organisms such as plants, animals, fungi, bacteria, and all other life forms that contribute to the biological aspect of the environment.

Categories of Biotic Factors:

Producers (Autotrophs): Organisms like plants and algae that synthesize their own food through photosynthesis or chemosynthesis.

Consumers (Heterotrophs): Animals and other organisms that rely on consuming other organisms for energy. They can be herbivores, carnivores, omnivores, or decomposers.

Decomposers and Detritivores: Decomposers such as fungi and bacteria, together with detritivores such as earthworms, break down dead organic matter, recycling nutrients back into the ecosystem.

Role of Biotic Factors:

Energy Flow: Producers, consumers, and decomposers drive energy transfer within an ecosystem.

Interdependence: Interactions like predation, competition, mutualism, and parasitism maintain ecological balance.

Population Regulation: Species interactions regulate populations, preventing overpopulation and resource depletion.

Examples of Biotic Interactions:

- Pollinators like bees and butterflies aid in plant reproduction.

- Predator-prey relationships, such as lions hunting zebras.

- Symbiotic relationships, such as fungi and algae forming lichens.

Abiotic Factors

Abiotic factors are the non-living physical and chemical components of an ecosystem. They provide the foundation upon which living organisms thrive and evolve.

Key Abiotic Factors:

Climate: Temperature, humidity, and precipitation influence species distribution and survival.

Soil: Nutrient composition, pH levels, and texture affect plant growth and the organisms dependent on plants.

Water: Availability, quality, and salinity determine the survival of aquatic and terrestrial life.

Sunlight: Essential for photosynthesis and influencing the behavior and physiology of organisms.

Air: Oxygen, carbon dioxide, and other gases are critical for respiration and photosynthesis.

Impact of Abiotic Factors:

Habitat Creation: Abiotic conditions define the types of habitats, such as deserts, forests, and aquatic zones.

Species Adaptation: Organisms evolve traits to adapt to specific abiotic conditions, like camels surviving in arid climates.

Ecosystem Dynamics: Abiotic changes, such as droughts or temperature shifts, can significantly alter ecosystems.

Examples of Abiotic Influence:

- The role of sunlight and CO2 in photosynthesis.

- River currents shaping aquatic habitats.

- Seasonal temperature changes triggering animal migration.

Interactions Between Biotic and Abiotic Factors

Biotic and abiotic factors are interconnected, influencing each other to maintain ecosystem equilibrium. For example:

- Plants (biotic) rely on soil nutrients, water, and sunlight (abiotic) to grow.

- Animals (biotic) depend on water bodies (abiotic) for hydration and food sources.

- Abiotic disturbances like hurricanes can affect biotic populations by altering habitats.

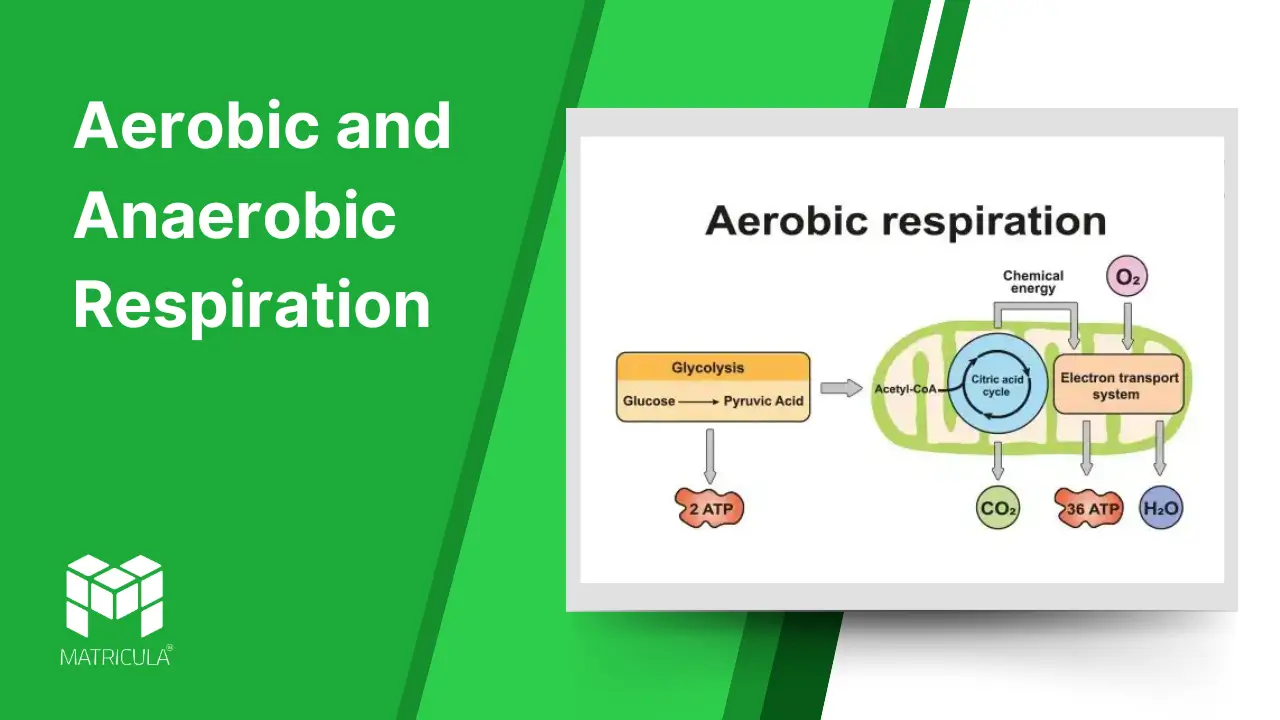

Respiration is a fundamental biological process through which living organisms generate energy to power cellular functions. It occurs in two main forms: aerobic and anaerobic respiration. While both processes aim to produce energy in the form of adenosine triphosphate (ATP), they differ significantly in their mechanisms, requirements, and byproducts. This article delves into the definitions, processes, and differences between aerobic and anaerobic respiration.

Aerobic Respiration

Aerobic respiration is the process of breaking down glucose in the presence of oxygen to produce energy. It is the most efficient form of respiration, generating a high yield of ATP.

Process:

- Glycolysis: The breakdown of glucose into pyruvate occurs in the cytoplasm, yielding 2 ATP and 2 NADH molecules.

- Krebs Cycle (Citric Acid Cycle): Pyruvate enters the mitochondria, where it is further oxidized, producing CO2, ATP, NADH, and FADH2.

- Electron Transport Chain (ETC): NADH and FADH2 donate electrons to the ETC in the inner mitochondrial membrane, driving the production of ATP through oxidative phosphorylation. Oxygen acts as the final electron acceptor, forming water.

Equation:

• Glucose (C6H12O6) + Oxygen (6O2) → Carbon dioxide (6CO2) + Water (6H2O) + Energy (36-38 ATP)

Byproducts: Carbon dioxide and water.

Efficiency: Produces 36-38 ATP molecules per glucose molecule.

Anaerobic Respiration

Anaerobic respiration occurs in the absence of oxygen, relying on alternative pathways to generate energy. While less efficient than aerobic respiration, it is vital for certain organisms and under specific conditions in multicellular organisms.

Process:

- Glycolysis: Similar to aerobic respiration, glucose is broken down into pyruvate in the cytoplasm, yielding 2 ATP and 2 NADH molecules.

- Fermentation: Pyruvate undergoes further processing to regenerate NAD+, enabling glycolysis to continue. The pathway varies depending on the organism:

- Lactic Acid Fermentation: Pyruvate is converted into lactic acid (e.g., in muscle cells during intense exercise).

- Alcoholic Fermentation: Pyruvate is converted into ethanol and CO2 (e.g., in yeast cells).

Equation (Lactic Acid Fermentation):

• Glucose (C6H12O6) → Lactic acid (2C3H6O3) + Energy (2 ATP)

Byproducts: Lactic acid or ethanol and CO2, depending on the pathway.

Efficiency: Produces only 2 ATP molecules per glucose molecule.
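The two overall equations given in this article (aerobic respiration and lactic acid fermentation) can be checked for atom balance with a few lines of Python. This is only an illustrative sketch: the atom counts are typed in by hand from the formulas in the text, and the helper names `total_atoms` and `is_balanced` are made up for this example.

```python
from collections import Counter

# Atom counts per molecule, written out by hand from the formulas in the text.
MOLECULES = {
    "C6H12O6": {"C": 6, "H": 12, "O": 6},  # glucose
    "O2": {"O": 2},
    "CO2": {"C": 1, "O": 2},
    "H2O": {"H": 2, "O": 1},
    "C3H6O3": {"C": 3, "H": 6, "O": 3},    # lactic acid
}

def total_atoms(side):
    """Sum the atoms over a list of (coefficient, formula) pairs."""
    totals = Counter()
    for coefficient, formula in side:
        for element, count in MOLECULES[formula].items():
            totals[element] += coefficient * count
    return totals

def is_balanced(reactants, products):
    """True when both sides contain the same number of each atom."""
    return total_atoms(reactants) == total_atoms(products)

# C6H12O6 + 6 O2 -> 6 CO2 + 6 H2O
aerobic = ([(1, "C6H12O6"), (6, "O2")], [(6, "CO2"), (6, "H2O")])
# C6H12O6 -> 2 C3H6O3
lactic = ([(1, "C6H12O6")], [(2, "C3H6O3")])

print(is_balanced(*aerobic))
print(is_balanced(*lactic))
```

Both checks should report that the equations balance. ATP is left out of the bookkeeping because it is an energy yield rather than a term in the atom balance.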

Key Differences Between Aerobic and Anaerobic Respiration

| Aspect | Aerobic Respiration | Anaerobic Respiration |
| --- | --- | --- |
| Oxygen Requirement | Requires oxygen | Occurs in absence of oxygen |
| ATP Yield | 36-38 ATP per glucose molecule | 2 ATP per glucose molecule |
| Location | Cytoplasm and mitochondria | Cytoplasm only |
| Byproducts | Carbon dioxide and water | Lactic acid or ethanol and CO2 |
| Efficiency | High | Low |

Applications and Importance

Aerobic Respiration:

- Essential for sustained energy production in most plants, animals, and other aerobic organisms.

- Supports high-energy-demand activities, such as physical exercise and metabolic processes.

Anaerobic Respiration:

- Enables survival during oxygen deficits, as seen in muscle cells during vigorous activity.

- Crucial in environments lacking oxygen, such as deep soil layers or aquatic sediments.

- Used in industries for fermentation processes, producing bread, beer, and yogurt.

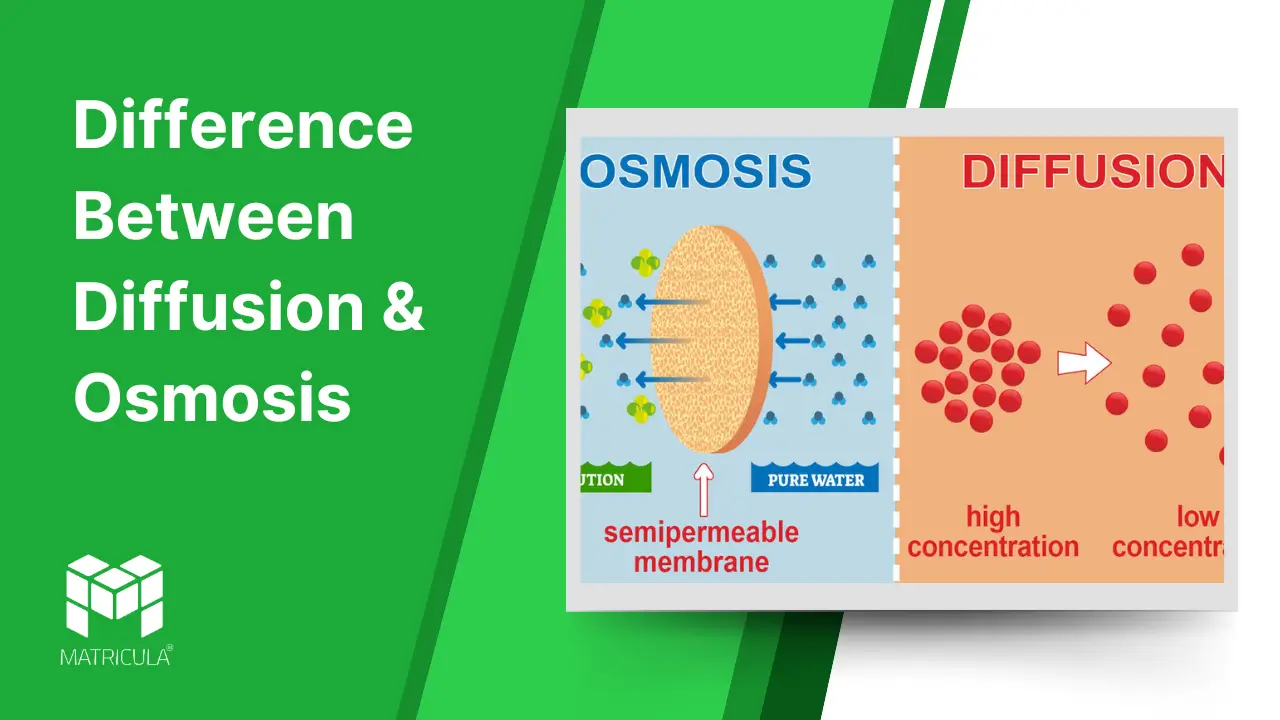

Diffusion and osmosis are fundamental processes that facilitate the movement of molecules in biological systems. While both involve the movement of substances, they differ in their mechanisms, requirements, and specific roles within living organisms. Understanding these differences is crucial for comprehending various biological and chemical phenomena.

What is Diffusion?

Diffusion is the process by which molecules move from an area of higher concentration to an area of lower concentration until equilibrium is reached. This process occurs due to the random motion of particles and does not require energy input.

Key Characteristics:

- Can occur in gases, liquids, or solids.

- Does not require a semipermeable membrane.

- Driven by the concentration gradient.
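The idea that net movement follows the concentration gradient and stops at equilibrium can be sketched with a toy two-compartment model. This is a simplified illustration, not a physical simulation: the function name `diffuse`, the transfer `rate` of 0.1 per step, and the starting concentrations are all assumptions chosen for the example.

```python
def diffuse(c_high, c_low, rate=0.1, steps=200):
    """Two equal-volume compartments exchanging particles.

    Each step, the net transfer is proportional to the concentration
    difference, so it shrinks as the compartments approach equilibrium.
    """
    a, b = float(c_high), float(c_low)
    for _ in range(steps):
        net_flow = rate * (a - b)  # flows from higher to lower concentration
        a -= net_flow
        b += net_flow
    return a, b

a, b = diffuse(10.0, 2.0)
print(round(a, 3), round(b, 3))
```

Starting from 10 and 2 (arbitrary units), both compartments settle near the average of 6, and the total amount is conserved throughout. No energy term appears anywhere in the model, which mirrors the passive nature of diffusion.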

Examples:

- The diffusion of oxygen and carbon dioxide across cell membranes during respiration.

- The dispersion of perfume molecules in the air.

Importance in Biology:

- Enables the exchange of gases in the lungs and tissues.

- Facilitates the distribution of nutrients and removal of waste products in cells.

What is Osmosis?

Osmosis is the movement of water molecules through a semipermeable membrane from an area of lower solute concentration to an area of higher solute concentration. It aims to balance solute concentrations on both sides of the membrane.

Key Characteristics:

- Specific to water molecules.

- Requires a semipermeable membrane.

- Driven by differences in solute concentration.

Examples:

- Absorption of water by plant roots from the soil.

- Water movement into red blood cells placed in a hypotonic solution.

Importance in Biology:

- Maintains cell turgor pressure in plants.

- Regulates fluid balance in animal cells.

Key Differences Between Diffusion and Osmosis

| Aspect | Diffusion | Osmosis |
| --- | --- | --- |
| Definition | Movement of molecules from high to low concentration. | Movement of water across a semipermeable membrane from low to high solute concentration. |
| Medium | Occurs in gases, liquids, and solids. | Occurs only in liquids. |
| Membrane Requirement | Does not require a membrane. | Requires a semipermeable membrane. |
| Molecules Involved | Involves all types of molecules. | Specific to water molecules. |
| Driving Force | Concentration gradient. | Solute concentration difference. |
| Examples | Exchange of gases in the lungs. | Absorption of water by plant roots. |

Similarities Between Diffusion and Osmosis

Despite their differences, diffusion and osmosis share several similarities:

- Both are passive processes, requiring no energy input.

- Both aim to achieve equilibrium in concentration.

- Both involve the movement of molecules driven by natural gradients.

Applications and Significance

- In Plants:

  - Osmosis helps plants absorb water and maintain structural integrity through turgor pressure.

  - Diffusion facilitates gas exchange during photosynthesis and respiration.

- In Animals:

  - Osmosis regulates hydration levels and prevents cell bursting or shrinking.

  - Diffusion ensures efficient oxygen delivery and carbon dioxide removal in tissues.

- In Everyday Life:

  - Water purification systems often use osmotic principles.

  - Diffusion explains the spread of substances like pollutants in the environment.

Chemistry concepts:

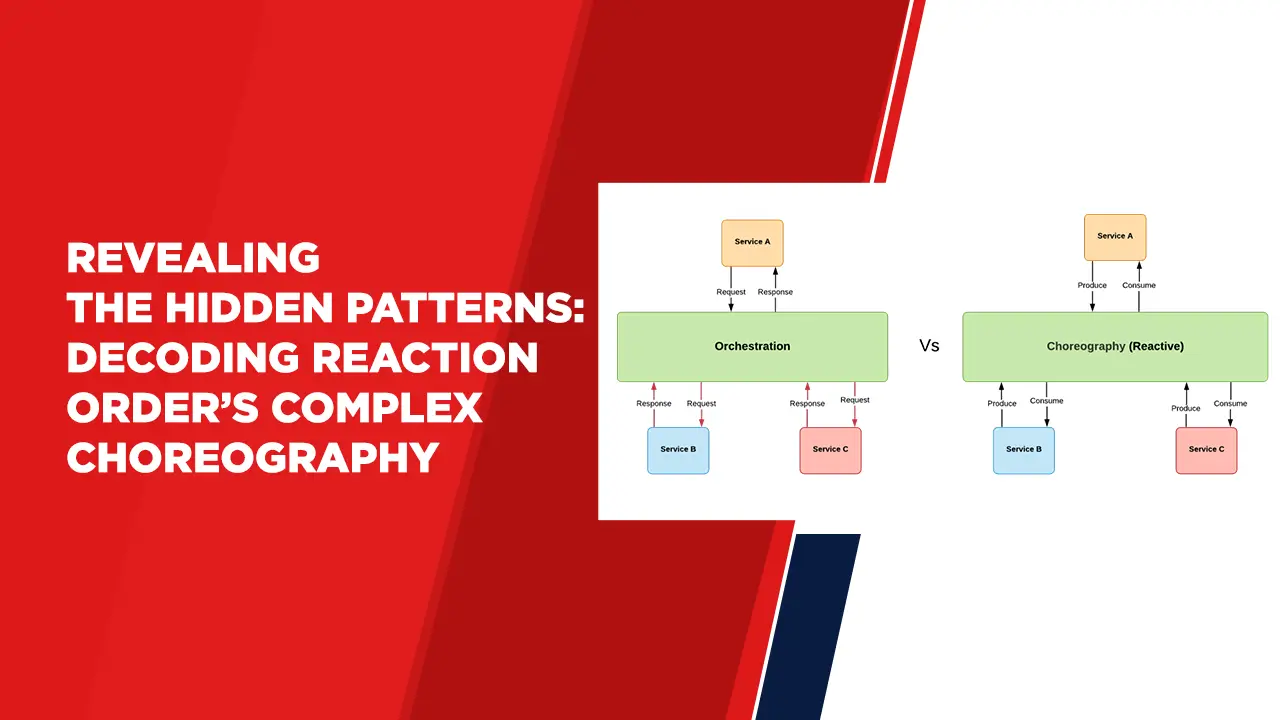

Revealing the Hidden Patterns: Decoding Reaction Order’s Complex Choreography

Introduction:

In the world of chemical kinetics, understanding the rates at which reactions occur is vital. The concept of order of reaction provides a framework for comprehending the relationship between reactant concentration and reaction rate. This article delves into the intricacies of order of reaction, shedding light on its significance, differences from molecularity, and the intriguing half-lives of zero and first order reactions.

Defining Order of Reaction:

Order of reaction refers to the exponent to which a reactant’s concentration is raised in the rate equation; the overall order is the sum of these exponents. It reveals how changes in reactant concentrations influence the rate of reaction. The order of a reaction can be fractional, whole, zero, or even negative, indicating the sensitivity of reaction rate to reactant concentrations.
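One common way to estimate an order from experiment is to compare two initial-rate measurements. The sketch below assumes a single-reactant rate law of the form rate = k[A]^n, so that n = log(rate2/rate1) / log([A]2/[A]1). The function name `reaction_order` and the data values are hypothetical and chosen only for illustration.

```python
import math

def reaction_order(conc_1, rate_1, conc_2, rate_2):
    """Estimate the order n in rate = k * [A]**n from two measurements."""
    return math.log(rate_2 / rate_1) / math.log(conc_2 / conc_1)

# Hypothetical data: doubling [A] from 0.10 to 0.20 mol/L quadruples the rate.
n = reaction_order(0.10, 2.0e-3, 0.20, 8.0e-3)
print(round(n, 2))
```

With these made-up numbers the estimate comes out as second order. If the rate did not change when the concentration doubled, the same function would return zero, and a rate that fell as the concentration rose would give a negative order.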

Difference Between Molecularity and Order of Reaction:

While molecularity and order of reaction are both terms used in chemical kinetics, they represent distinct aspects of a reaction:

Molecularity:

Molecularity is the number of reactant particles that participate in an elementary reaction step. It describes the collision and interaction between molecules, with possible values of unimolecular, bimolecular, or termolecular.

Order of Reaction:

Order of reaction, on the other hand, is a concept applied to the entire reaction, representing the relationship between reactant concentrations and reaction rate as determined by experimental data.

Half-Life of First Order Reaction:

The half-life of a reaction is the time it takes for half of the reactant’s concentration to be used up. In reactions that follow first-order kinetics, the half-life remains constant and is not affected by the starting concentration. This characteristic makes first-order kinetics useful in fields including research on radioactive decay and investigations into drug metabolism.

Half-Life of Zero Order Reaction:

In contrast to first order reactions, the half-life of a zero order reaction is directly proportional to the initial concentration of the reactant. This means that as the starting concentration decreases, the half-life also decreases. Zero order reactions are intriguing because they proceed at a constant rate regardless of changes in reactant concentration.
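The contrast between the two half-lives follows from the standard integrated rate laws, which give t½ = ln 2 / k for a first order reaction and t½ = [A]0 / (2k) for a zero order reaction. The sketch below simply evaluates both expressions. The rate constant and starting concentrations are hypothetical, and the same numerical value of k is reused for both orders purely for illustration, even though the units of k differ between them.

```python
import math

def half_life_first_order(k):
    """t_1/2 = ln(2) / k; independent of the starting concentration."""
    return math.log(2) / k

def half_life_zero_order(initial_conc, k):
    """t_1/2 = [A]0 / (2k); proportional to the starting concentration."""
    return initial_conc / (2 * k)

k = 0.05  # hypothetical rate constant
for a0 in (1.0, 0.5, 0.25):
    first = half_life_first_order(k)
    zero = half_life_zero_order(a0, k)
    print(a0, round(first, 2), round(zero, 2))
```

Reading down the printed rows, the first order column stays the same while the zero order column halves each time the starting concentration is halved.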

Conclusion:

Order of reaction is a pivotal concept that unlocks the intricate relationships between reactant concentrations and reaction rates. It provides invaluable insights into reaction kinetics and is essential for understanding and controlling chemical processes. By deciphering the order of reaction, scientists and researchers can fine-tune reaction conditions, optimise industrial processes, and gain a deeper understanding of the underlying mechanisms that drive chemical transformations. Through this understanding, the world of chemistry becomes more predictable and manipulable, leading to innovation and progress in various scientific and industrial fields.

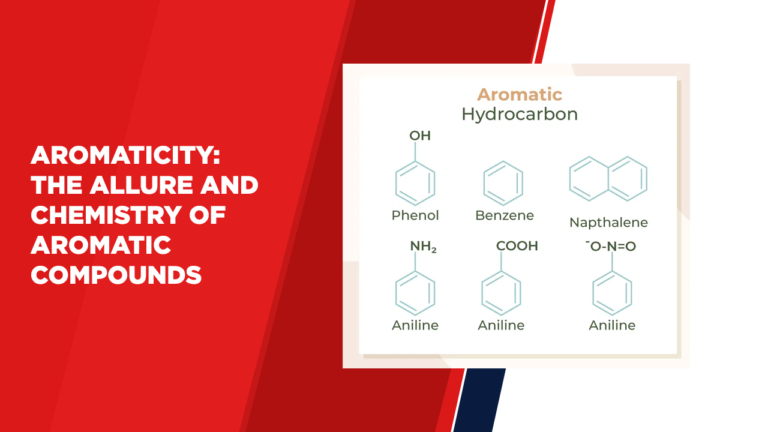

Aromaticity: The Allure and Chemistry of Aromatic Compounds

Introduction:

Aromatic compounds hold a special place in the realm of organic chemistry, captivating scientists with their unique properties and intriguing structures. Despite the term “aromatic” often being associated with fragrances, aromaticity in chemistry refers to a distinctive set of conjugated ring systems that exhibit remarkable stability and reactivity. This article delves into the world of aromatic compounds, elucidating their meaning, discussing aromatic amino acids, hydrocarbons, and their significance in modern chemistry.

Understanding Aromatic Compounds:

Aromatic compounds are characterised by a specific pattern of alternating single and double bonds within a closed-ring structure known as an aromatic ring. This arrangement of electrons results in enhanced stability, making these compounds less prone to reactions that would break their aromaticity.

Aromatic Meaning in Chemistry:

The term “aromatic” stems from the early observation that some of these compounds have pleasant aromas. However, the modern definition of aromaticity is rooted in the electronic structure of the compounds, specifically their resonance structures that distribute electron density evenly across the ring.

Aromatic Amino Acids:

In biochemistry, aromatic amino acids play a crucial role in protein structure and function. Phenylalanine, tyrosine, and tryptophan are three aromatic amino acids, each contributing distinct properties to proteins, including absorption of ultraviolet light and involvement in protein-ligand interactions.

Aromatic Hydrocarbons:

Aromatic hydrocarbons are a subset of aromatic compounds that consist solely of carbon and hydrogen atoms. Benzene, the simplest aromatic hydrocarbon, is often considered the archetype of aromaticity due to its highly stable ring structure. Aromatic hydrocarbons find applications in various industries, including pharmaceuticals, polymers, and fuels.

Significance in Modern Chemistry:

Aromatic compounds are at the heart of various chemical processes and industries:

Medicinal Chemistry:

Many pharmaceuticals incorporate aromatic rings into their structures to influence their biological activity.

Material Science:

Aromatic compounds are essential components of polymers, contributing to their stability, strength, and rigidity.

Organic Synthesis:

Aromatic compounds serve as versatile building blocks for creating complex organic molecules.

Conclusion:

Aromatic compounds have stood the test of time, intriguing chemists and shaping the course of chemical research and industry. From their fascinating resonance structures to their role in biochemistry and practical applications in various fields, aromatic compounds continue to be a subject of study and innovation. As our understanding of their properties deepens, these compounds continue to inspire discoveries that pave the way for advancements in chemistry, materials science, and medicine.

Unraveling the Secrets of Quantum Reality: The Azimuthal Quantum Number Demystified

Introduction

In the arcane realm of quantum mechanics lies the elusive Azimuthal Quantum Number, which plays an essential role in shaping the behavior of electrons in atoms. To shed some light on this hidden reality, this article will investigate its significance, origins and methods of determination, hopefully opening new avenues into quantum reality!

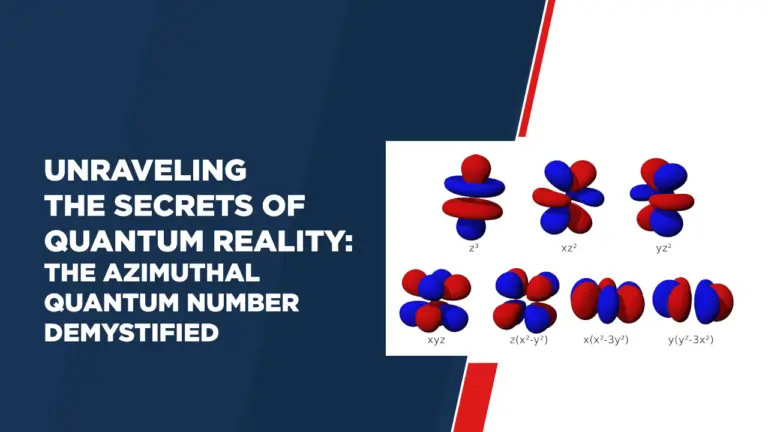

Understanding Azimuthal Quantum Number

The Azimuthal Quantum Number, abbreviated “l,” is an integral component of quantum mechanics that describes an electron’s orbital angular momentum within an atom. As one of the quantum numbers used to describe the energy levels and spatial distribution of electrons within an atom, the azimuthal quantum number sorts electron orbitals into subshells with distinct energies and shapes.

The Formula and Importance

The defining rule for the Azimuthal Quantum Number is simple: l can take any whole-number value from zero to n-1, where “n” represents the principal quantum number of the energy level. Rather than setting the size of an electron’s orbital, which depends mainly on n, l determines the orbital’s shape: l = 0 corresponds to an s-orbital, l = 1 to a p-orbital, l = 2 to a d-orbital, and so on.
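The range of l from zero to n-1 and its link to the subshell letters can be listed programmatically. This is a minimal sketch: the function names `allowed_l_values` and `subshells` are made up for the example, and the letter sequence s, p, d, f, g is the conventional labelling for l = 0 to 4.

```python
SUBSHELL_LETTERS = "spdfg"  # conventional labels for l = 0, 1, 2, 3, 4

def allowed_l_values(n):
    """Return the allowed azimuthal quantum numbers for principal number n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return list(range(n))

def subshells(n):
    """Return labels such as '3s', '3p', '3d' for a given n (up to l = 4)."""
    return [
        f"{n}{SUBSHELL_LETTERS[l]}"
        for l in allowed_l_values(n)
        if l < len(SUBSHELL_LETTERS)
    ]

print(allowed_l_values(3))
print(subshells(3))
```

For n = 3 this lists l = 0, 1 and 2, matching the 3s, 3p and 3d subshells.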

Origin of Azimuthal Quantum Number

Arnold Sommerfeld introduced the Azimuthal Quantum Number in the early 20th century when he extended Niels Bohr’s groundbreaking model of the atom. Together with the principal quantum number, the magnetic quantum number and the spin quantum number, it forms the set of four quantum numbers used to describe an electron in an atom.

Interpreting the function of the Azimuthal Quantum Number

To better understand the role of azimuthal quantum numbers, think of them loosely like planets orbiting our Sun: just as planets have orbits with different sizes and shapes, electrons occupy orbitals with different shapes. The quantum numbers define these orbitals, which describe how likely an electron is to be found in a particular region around the nucleus, and this in turn affects the chemical properties and behavior of the elements.

Determination of the Azimuthal Quantum Number

Determining the Azimuthal Quantum Number requires some insight. One method involves measuring the angular momentum of an electron and comparing it with the values allowed for each quantum number. Knowledge of atomic structure also helps: identifying the subshell in which the electron resides gives the number directly.
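Both routes can be illustrated in a short sketch. Reading l from a subshell label such as “3d” uses the conventional letter mapping, and the angular momentum comparison uses the standard quantum-mechanical result, not stated in this article, that the magnitude of the orbital angular momentum is √(l(l+1)) in units of ħ. The function names here are hypothetical.

```python
import math

LETTER_TO_L = {"s": 0, "p": 1, "d": 2, "f": 3}

def l_from_subshell(label):
    """Read n and l from a subshell label such as '3d'."""
    n, letter = int(label[:-1]), label[-1]
    l = LETTER_TO_L[letter]
    if l >= n:
        raise ValueError(f"{label} is not allowed: l must be below n")
    return n, l

def angular_momentum_in_hbar(l):
    """Magnitude of orbital angular momentum, sqrt(l(l+1)), in units of hbar."""
    return math.sqrt(l * (l + 1))

n, l = l_from_subshell("3d")
print(n, l, round(angular_momentum_in_hbar(l), 3))
```

A label such as “2d” is rejected because l = 2 is not below n = 2, which is the same zero to n-1 limit expressed as a check.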

Conclusion

As we explore the quantum world, Azimuthal Quantum Numbers become an invaluable piece of the puzzle, providing insight into electron behavior, energy levels and atomic structure. From its formula and historical significance to the shaping of orbits – this quantum number serves as an enduring testament to humanity’s quest to understand fundamental aspects of existence.

Unveiling the Silent Crisis: Delving Into the World of Deforestation

Introduction:

Deforestation, a global environmental concern, involves the widespread clearance of forests for various purposes. While human progress and economic development are important, the consequences of deforestation are far-reaching, affecting ecosystems, biodiversity, and even the climate. This article delves into the intricacies of deforestation, its causes, effects, and the significant connection between forest cover and rainfall patterns.

Understanding Deforestation:

Deforestation is the clearing of forests, which converts forested areas into non-forested land. It happens for reasons such as agriculture, urbanisation, logging, mining and infrastructure development.

Causes of Deforestation:

Several factors contribute to deforestation:

Agricultural Expansion:

Clearing forests to make way for agriculture, including livestock grazing and crop cultivation, is a significant driver of deforestation.

Logging and Wood Extraction:

The demand for timber and wood products leads to unsustainable logging practices that degrade forests.

Urbanization:

Rapid urban growth requires land for housing and infrastructure, often leading to deforestation.

Mining:

Extractive industries such as mining can lead to the destruction of forests to access valuable minerals and resources.

Effects of Deforestation:

The consequences of deforestation are manifold:

Loss of Biodiversity:

Deforestation disrupts ecosystems, leading to the loss of diverse plant and animal species that depend on forest habitats.

Climate Change:

Trees absorb carbon dioxide, a greenhouse gas. Deforestation increases atmospheric carbon levels, contributing to global warming.

Soil Erosion:

Tree roots stabilize soil. Without them, soil erosion occurs, affecting agricultural productivity and water quality.

Disruption of Water Cycles:

Trees play a role in regulating the water cycle. Deforestation can lead to altered rainfall patterns and reduced water availability.

Consequences on Rainfall Patterns:

Deforestation can disrupt local and regional rainfall patterns, an effect described by the “biotic pump” theory. Trees release moisture into the atmosphere through a process called transpiration. This moisture, once airborne, contributes to cloud formation and rainfall. When forests are cleared, this natural moisture circulation is disrupted, potentially leading to reduced rainfall.

Conclusion:

Deforestation is not merely the loss of trees; it’s the loss of ecosystems, biodiversity, and the delicate balance that sustains our planet. The impact of deforestation ripples through ecosystems, affecting everything from climate stability to local water resources. Recognizing the causes, effects, and consequences of deforestation is vital for addressing this global issue and fostering a sustainable coexistence between humanity and nature. As awareness grows, the world must work collaboratively to find innovative solutions that balance development with the preservation of our invaluable forests.

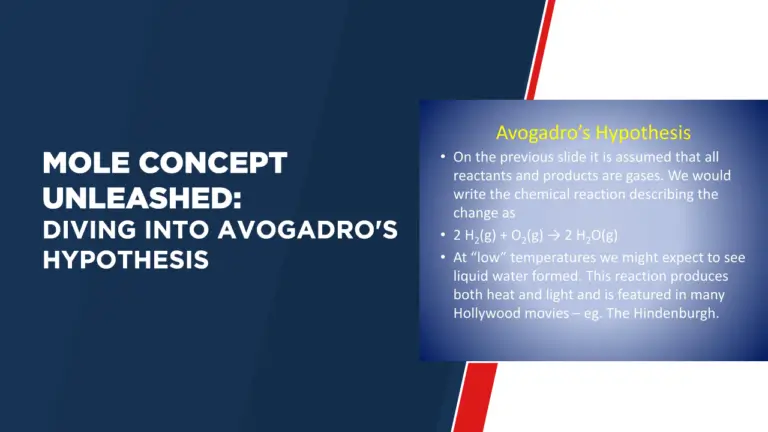

Unveiling Avogadro’s Number: The Secret Behind Counting Atoms

Introduction

Chemistry bridges our everyday experience of the macroscopic world and the realm of atoms and molecules, and Avogadro’s number is one of the fundamental constants that makes this bridge possible. In this article we unravel its secrets and look at its significance for counting atoms.

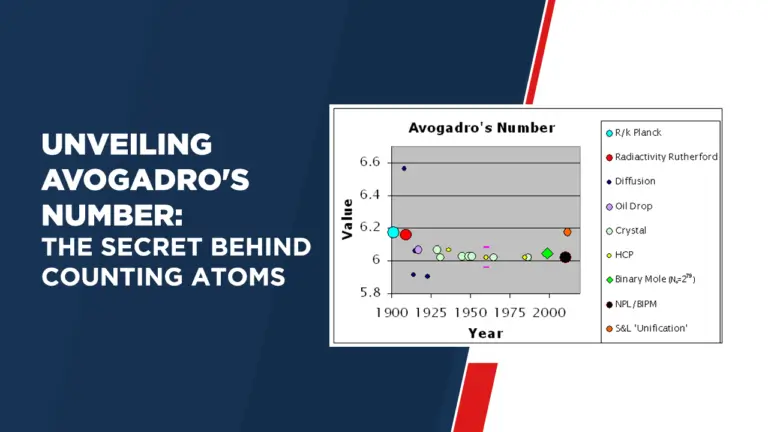

What is Avogadro’s Number (NA)

Avogadro’s number, denoted as NA, is an essential constant that indicates how many atoms, ions or molecules make up one mole of any substance. A mole is a unit for measuring amounts of chemical substances; Avogadro’s number connects this macroscopic amount with the particles that exist on the microscopic scale. Its value is approximately 6.022 × 10²³.

Value of Avogadro’s Number

The value of Avogadro’s number, 6.022 × 10²³, may seem astonishingly large, but it actually shows how minute individual atoms and molecules truly are. It also illustrates why even tiny amounts of a substance contain an enormous number of particles.

Avogadro’s Number Units

As a pure count of particles, Avogadro’s number is dimensionless. The closely related Avogadro constant, NA, carries the unit mol⁻¹ (particles per mole), and it is this per-mole quantity that chemists use in calculations to convert between macroscopic amounts, such as moles obtained from a mass in grams, and microscopic ones, such as numbers of individual particles.

Define Avogadro’s Number

Simply, Avogadro’s number can be defined as the total number of atoms, ions or molecules present in one mole of a substance. Chemists use this definition to make connections between quantities of substances measured as moles and their composition of individual atomic or molecular entities.

How to Calculate Avogadro’s Number

Calculating with Avogadro’s number requires understanding the relationship between mass, molar mass, and the number of particles in a substance. One mole of any material contains Avogadro’s number of particles, so you can find the number of moles by dividing the mass by the molar mass, then multiply that figure by Avogadro’s number to get the count of particles.
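
The two steps described above (mass to moles, then moles to particles) can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names are hypothetical, and the water sample (18.0 g, with a molar mass of roughly 18.015 g/mol) is an assumed example that does not come from the article.

```python
# Avogadro constant in particles per mole (exact value under the 2019 SI definition).
AVOGADRO = 6.02214076e23


def moles_from_mass(mass_g: float, molar_mass_g_per_mol: float) -> float:
    """Step 1: amount of substance in moles = mass / molar mass."""
    if molar_mass_g_per_mol <= 0:
        raise ValueError("molar mass must be positive")
    return mass_g / molar_mass_g_per_mol


def particle_count(mass_g: float, molar_mass_g_per_mol: float) -> float:
    """Step 2: number of particles = moles * Avogadro's number."""
    return moles_from_mass(mass_g, molar_mass_g_per_mol) * AVOGADRO


# Assumed example: 18.0 g of water, molar mass taken as about 18.015 g/mol.
water_molecules = particle_count(18.0, 18.015)
print(f"{water_molecules:.3e} molecules of water")
```

Keeping the mole calculation in its own function mirrors the way the calculation is usually written out by hand, so either step can be checked separately.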

Conclusion

Avogadro’s number stands as an indispensable foundation of modern chemistry, acting as a link between macroscopic quantities and the atomic world. Its value, on the order of 10²³, may seem daunting at first, but its immense size demonstrates just how small and numerous the particles of the microscopic world truly are. Understanding Avogadro’s number is more than an academic pursuit; it unlocks quantitative reasoning about molecular interactions.

Mapping Chemistry’s DNA: Exploring the Secrets of the Valency Chart

Introduction:

In the realm of chemistry, understanding the valency of elements is crucial for comprehending their behavior in chemical reactions and their participation in forming compounds. Valency charts serve as indispensable tools for visualizing and interpreting these valence interactions. This article delves into the significance of valency, the construction of a full valency chart encompassing all elements, and the invaluable insights provided by these charts.

Unravelling Valency:

Valency refers to the combining capacity of an element, indicating the number of electrons an atom gains, loses, or shares when forming chemical compounds. Valence electrons, occupying the outermost electron shell, are key players in these interactions, defining the chemical behaviour of an element.

Constructing a Full Valency Chart:

A full valency chart systematically presents the valence electrons of all elements, allowing chemists to predict the possible oxidation states and bonding patterns. The periodic table guides the organisation of this chart, categorising elements by their atomic number and electron configuration.

Benefits of Valency Charts:

Valency charts offer several advantages:

Predicting Compound Formation:

Valency charts facilitate predicting how elements interact and form compounds based on their valence electrons.

Balancing Chemical Equations:

Understanding valency helps in balancing chemical equations by ensuring the conservation of atoms and electrons.

Determining Oxidation States:

Valency charts assist in identifying the possible oxidation states of elements in compounds.

Classifying Elements:

Valency charts aid in classifying elements as metals, nonmetals, or metalloids based on their electron configuration.

Navigating the Valency Chart:

When using a valency chart, follow these steps:

Locate the Element:

Find the element in the chart based on its atomic number.

Identify Valence Electrons:

Observe the group number (column) to determine the number of valence electrons.

Predict Ionic Charges:

For main group elements, the valence electrons often dictate the ionic charge when forming ions.
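
The three steps above can be sketched as a small lookup for main group elements. This is a simplified rule of thumb under stated assumptions: it uses the modern 1 to 18 group numbering, ignores transition metals and exceptions such as helium, and the function names are hypothetical rather than taken from any chemistry library.

```python
from typing import Optional


def valence_electrons(group: int) -> int:
    """Valence electrons of a main group element from its group number (1 to 18 numbering)."""
    if group in (1, 2):
        return group
    if 13 <= group <= 18:
        return group - 10
    raise ValueError("rule of thumb covers main group elements only (groups 1, 2, 13 to 18)")


def typical_ionic_charge(group: int) -> Optional[int]:
    """Most common ionic charge; None where the element tends to share electrons instead."""
    v = valence_electrons(group)
    if v <= 3:
        return v        # metals tend to lose their valence electrons
    if v == 4:
        return None     # group 14 elements usually form covalent bonds
    return v - 8        # nonmetals gain electrons to complete an octet (0 for noble gases)


# Illustrative elements and their groups: sodium (1), magnesium (2), oxygen (16), chlorine (17).
for name, group in [("Na", 1), ("Mg", 2), ("O", 16), ("Cl", 17)]:
    print(name, valence_electrons(group), typical_ionic_charge(group))
```

Real elements often show several oxidation states, so the result is a first guess to check against a full valency chart.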

Valency Chart and Periodic Trends:

Valency charts reflect periodic trends, such as the increase in valence electrons from left to right across a period. For main group elements, the valency typically equals the number of valence electrons when there are four or fewer, and eight minus that number when there are more.

Conclusion:

Valency charts serve as compasses, guiding chemists through the intricate landscape of element interactions. By providing a visual representation of valence electrons and potential bonding patterns, these charts empower scientists to predict reactions, balance equations, and grasp the nuances of chemical behavior. In the pursuit of understanding the building blocks of matter, valency charts stand as essential tools, enabling us to navigate the complex world of chemistry with confidence and precision.

English concepts:

Clear pronunciation is a cornerstone of effective communication. While vocabulary and grammar are essential, the physical aspects of speech production, particularly mouth and tongue positioning, play a critical role in producing accurate sounds. Understanding and practicing proper articulation techniques can significantly enhance clarity and confidence in speech.

How Speech Sounds Are Produced

Speech sounds are created by the interaction of various speech organs, including the lips, tongue, teeth, and vocal cords. The tongue’s positioning and movement, combined with the shape of the mouth, determine the quality and accuracy of sounds. For example, vowels are shaped by the tongue’s height and position in the mouth, while consonants involve specific points of contact between the tongue and other parts of the oral cavity.

The Role of the Tongue

- Vowel Sounds:

- The tongue’s position is critical in forming vowels. For instance, high vowels like /iː/ (“beat”) require the tongue to be raised close to the roof of the mouth, while low vowels like /æ/ (“bat”) require the tongue to be positioned lower.

- Front vowels, such as /e/ (“bet”), are produced when the tongue is closer to the front of the mouth, whereas back vowels like /uː/ (“boot”) involve the tongue retracting toward the back.

- Consonant Sounds:

- The tongue’s precise placement is crucial for consonants. For example, the /t/ and /d/ sounds are formed by the tongue touching the alveolar ridge (the ridge behind the upper teeth), while the /k/ and /g/ sounds are made with the back of the tongue against the soft palate.

- Sounds like /ʃ/ (“sh” as in “she”) require the tongue to be slightly raised and positioned near the hard palate without touching it.

The Role of the Mouth

- Lip Movement:

- Rounded vowels like /oʊ/ (“go”) involve the lips forming a circular shape, while unrounded vowels like /ɑː/ (“father”) keep the lips relaxed.

- Labial consonants, such as /p/, /b/, and /m/, rely on the lips coming together or closing.

- Jaw Position:

- The jaw’s openness affects the production of sounds. For example, open vowels like /ɑː/ require a wider jaw opening compared to close vowels like /iː/.

Improving Pronunciation Through Positioning

- Mirror Practice: Observe your mouth and tongue movements in a mirror while speaking. This visual feedback can help you make necessary adjustments.

- Phonetic Exercises: Practice individual sounds by focusing on the tongue and mouth’s required positions. For instance, repeat minimal pairs like “ship” and “sheep” to differentiate between /ɪ/ and /iː/.

- Use Pronunciation Guides: Resources like the International Phonetic Alphabet (IPA) provide detailed instructions on mouth and tongue positioning for each sound.

- Seek Feedback: Work with a language coach or use pronunciation apps that provide real-time feedback on your articulation.

Common Challenges and Solutions

- Retroflex Sounds: Some learners struggle with retroflex sounds, where the tongue curls back slightly. Practicing these sounds slowly and with guidance can improve accuracy.

- Th Sounds (/θ/ and /ð/): Non-native speakers often find it challenging to position the tongue between the teeth for these sounds. Practice holding the tongue lightly between the teeth and exhaling.

- Consistency: Regular practice is essential. Even small daily efforts can lead to noticeable improvements over time.

Conclusion

Clear pronunciation is not merely about knowing the right words but also mastering the physical aspects of speech. Proper mouth and tongue positioning can significantly enhance your ability to articulate sounds accurately and communicate effectively. By focusing on these elements and practicing consistently, you can achieve greater clarity and confidence in your speech.

English, as a global language, exhibits a remarkable diversity of accents that reflect the rich cultural and geographical contexts of its speakers. Regional accents not only shape the way English is pronounced but also contribute to the unique identity of communities. From the crisp enunciation of British Received Pronunciation (RP) to the melodic tones of Indian English, regional accents significantly influence how English sounds across the world.

What Are Regional Accents?

A regional accent is the distinct way in which people from a specific geographical area pronounce words. Factors like local dialects, historical influences, and contact with other languages contribute to the development of these accents. For instance, the Irish English accent retains traces of Gaelic phonetics, while American English shows influences from Spanish, French, and Indigenous languages.

Examples of Regional Accents in English

- British Accents:

- Received Pronunciation (RP): Often associated with formal British English, RP features clear enunciation and is commonly used in media and education.

- Cockney: This London-based accent drops the “h” sound (e.g., “house” becomes “‘ouse”) and uses glottal stops (e.g., “bottle” becomes “bo’le”).

- Scouse: Originating from Liverpool, this accent is characterized by its nasal tone and unique intonation patterns.

- American Accents:

- General American (GA): Considered a neutral accent in the U.S., GA lacks strong regional markers like “r-dropping” or vowel shifts.

- Southern Drawl: Found in the southern United States, this accent elongates vowels and has a slower speech rhythm.

- New York Accent: Known for its “r-dropping” (e.g., “car” becomes “cah”) and distinct pronunciation of vowels, like “coffee” pronounced as “caw-fee.”

- Global English Accents:

- Australian English: Features a unique vowel shift, where “day” may sound like “dye.”

- Indian English: Retains features from native languages, such as retroflex consonants and a syllable-timed rhythm.

- South African English: Combines elements of British English with Afrikaans influences, producing distinctive vowel sounds.

Impact of Regional Accents on Communication

- Intelligibility: While accents enrich language, they can sometimes pose challenges in global communication. For example, non-native speakers might struggle with understanding rapid speech or unfamiliar intonation patterns.

- Perceptions and Bias: Accents can influence how speakers are perceived, often unfairly. For instance, some accents are associated with prestige, while others may face stereotypes. Addressing these biases is crucial for fostering inclusivity.

- Cultural Identity: Accents serve as markers of cultural identity, allowing individuals to connect with their heritage. They also add color and diversity to the English language.

Embracing Accent Diversity

- Active Listening: Exposure to different accents through media, travel, or conversation helps improve understanding and appreciation of linguistic diversity.

- Pronunciation Guides: Resources like the International Phonetic Alphabet (IPA) can aid in recognizing and reproducing sounds from various accents.

- Celebrate Differences: Recognizing that there is no “correct” way to speak English encourages mutual respect and reduces linguistic prejudice.

Conclusion

Regional accents are a testament to the adaptability and richness of English as a global language. They highlight the influence of history, culture, and geography on pronunciation, making English a dynamic and evolving means of communication. By embracing and respecting these differences, we can better appreciate the beauty of linguistic diversity.

Language learners and linguists alike rely on the International Phonetic Alphabet (IPA) as an essential tool to understand and master pronunciation. Developed in the late 19th century, the IPA provides a consistent system of symbols representing the sounds of spoken language. It bridges the gap between spelling and speech, offering clarity and precision in a world of linguistic diversity.

What is the IPA?

The IPA is a standardized set of symbols that represent each sound, or phoneme, of human speech. Unlike regular alphabets tied to specific languages, the IPA is universal, transcending linguistic boundaries. It encompasses vowels, consonants, suprasegmentals (like stress and intonation), and diacritics to convey subtle sound variations. For instance, the English word “cat” is transcribed as /kæt/, ensuring its pronunciation is clear to anyone familiar with the IPA, regardless of their native language.

Why is the IPA Important?

The IPA is invaluable in addressing the inconsistencies of English spelling. For example, consider the words “though,” “through,” and “tough.” Despite their similar spellings, their pronunciations (/ðoʊ/, /θruː/, and /tʌf/) vary significantly. The IPA eliminates confusion by focusing solely on sounds, not spelling.

Additionally, the IPA is a cornerstone for teaching and learning pronunciation in foreign languages. By understanding the symbols, learners can accurately replicate sounds that do not exist in their native tongue. For instance, French nasal vowels or the German “/χ/” sound can be practiced effectively using IPA transcriptions.

Applications of the IPA in Learning Pronunciation

- Consistency Across Languages: The IPA provides a consistent method for learning pronunciation, regardless of the language. For example, the symbol /ə/ represents the schwa sound in English, as in “sofa,” and also applies to other languages like French and German.

- Corrective Feedback: Teachers and learners can use the IPA to identify specific pronunciation errors. For instance, an English learner mispronouncing “think” as “sink” can see the difference between /θ/ (voiceless dental fricative) and /s/ (voiceless alveolar fricative).

- Improved Listening Skills: Familiarity with the IPA sharpens listening comprehension. Recognizing sounds and their corresponding symbols trains learners to distinguish subtle differences, such as the distinction between /iː/ (“sheep”) and /ɪ/ (“ship”) in English.

- Self-Study Tool: Many dictionaries include IPA transcriptions, enabling learners to practice pronunciation independently. Online resources, such as Forvo and YouTube tutorials, often incorporate IPA to demonstrate sounds visually and audibly.

How to Learn the IPA

- Start Small: Begin with common sounds in your target language and gradually expand to more complex symbols.

- Use Visual Aids: IPA charts, available online, visually group sounds based on their articulation (e.g., plosives, fricatives, and vowels).

- Practice Regularly: Regular exposure to IPA transcriptions and practice with native speakers or recordings helps reinforce learning.

- Seek Professional Guidance: Enroll in language classes or consult linguists familiar with the IPA for advanced instruction.

Conclusion

The International Phonetic Alphabet is a powerful tool that simplifies the complex relationship between speech and writing. Its precision and universality make it an indispensable resource for language learners, educators, and linguists. By embracing the IPA, you can unlock the intricacies of pronunciation and enhance your ability to communicate effectively across languages.

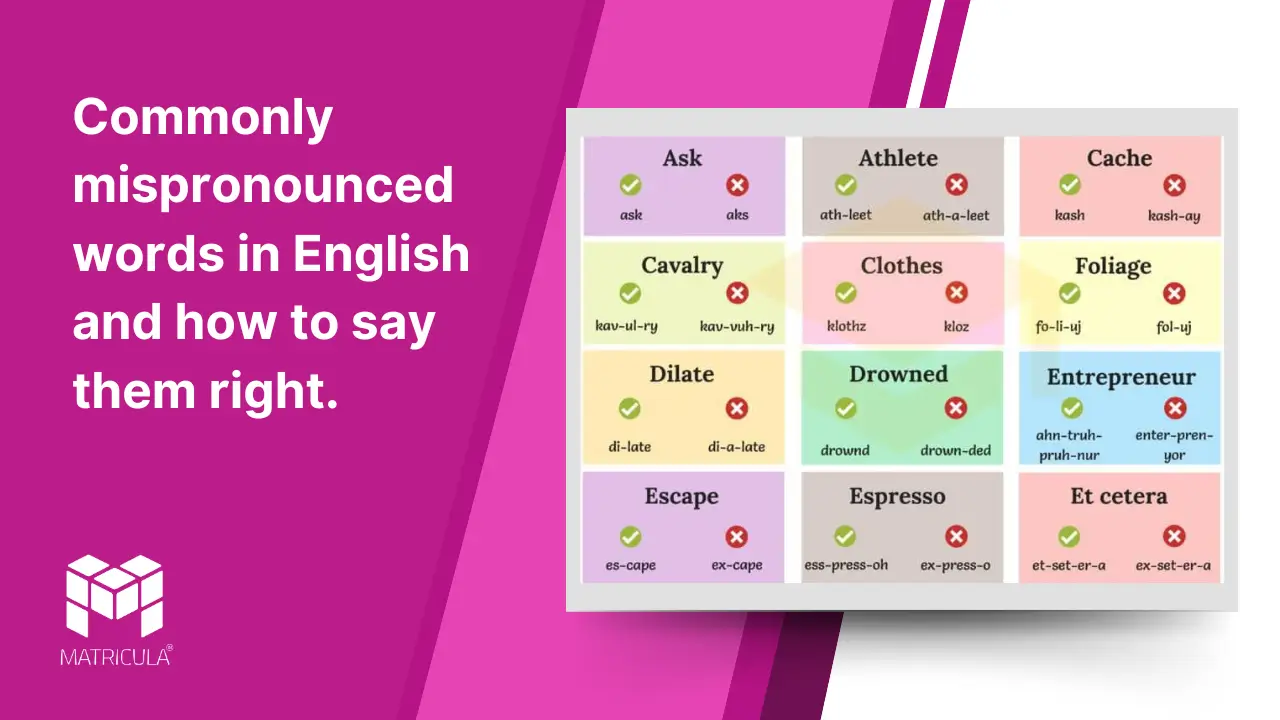

English, with its vast vocabulary and roots in multiple languages, often leaves even native speakers grappling with correct pronunciations. Mispronunciations can stem from regional accents, linguistic influences, or simply the irregularities of English spelling. Here, we explore some commonly mispronounced words and provide tips to articulate them correctly.

1. Pronunciation

Common Mistake: Saying “pro-noun-ciation”

Correct: “pruh-nun-see-ay-shun”

This word ironically trips people up. Remember, it comes from the root “pronounce,” but the vowel sounds shift in “pronunciation.”

2. Mischievous

Common Mistake: Saying “mis-chee-vee-us”

Correct: “mis-chuh-vus”

This three-syllable word often gains an unnecessary extra syllable. Keep it simple!

3. Espresso

Common Mistake: Saying “ex-press-o”

Correct: “ess-press-o”

There is no “x” in this caffeinated delight. The pronunciation reflects its Italian origin.

4. February

Common Mistake: Saying “feb-yoo-air-ee”

Correct: “feb-roo-air-ee”

The first “r” is often dropped in casual speech, but pronouncing it correctly shows attention to detail.

5. Library

Common Mistake: Saying “lie-berry”

Correct: “lie-bruh-ree”

Avoid simplifying the word by dropping the second “r.” Practice enunciating all the syllables.

6. Nuclear

Common Mistake: Saying “nuke-yoo-lur”

Correct: “new-klee-ur”

This word, often heard in political discussions, has a straightforward three-syllable pronunciation.

7. Almond

Common Mistake: Saying “al-mond”

Correct: “ah-mund” (in American English) or “al-mund” (in British English)

Regional differences exist, but in American English, the “l” is typically silent.

8. Often

Common Mistake: Saying “off-ten”

Correct: “off-en”

Historically, the “t” was pronounced, but modern English favors the silent “t” in most accents.

9. Salmon

Common Mistake: Saying “sal-mon”

Correct: “sam-un”

The “l” in “salmon” is silent. Think of “salmon” as “sam-un.”

10. Et cetera

Common Mistake: Saying “ek-cetera”

Correct: “et set-er-uh”

Derived from Latin, this phrase means “and the rest.” Pronouncing it correctly can lend sophistication to your speech.

Tips to Avoid Mispronunciation:

- Listen and Repeat: Exposure to correct pronunciations through audiobooks, podcasts, or conversations with fluent speakers can help.

- Break It Down: Divide challenging words into syllables and practice saying each part.

- Use Online Resources: Websites like Forvo and dictionary apps often provide audio examples of words.

- Ask for Help: If unsure, don’t hesitate to ask someone knowledgeable or consult a reliable source.

Mastering the correct pronunciation of tricky words takes practice and patience, but doing so can significantly enhance your confidence and communication skills. Remember, every misstep is a stepping stone toward becoming more fluent!

Speaking English with a foreign accent is a natural part of learning the language, as it reflects your linguistic background. However, some individuals may wish to reduce their accent to improve clarity or feel more confident in communication. Here are practical tips to help you minimize a foreign accent in English.

1. Listen Actively

One of the most effective ways to improve pronunciation is by listening to native speakers. Pay attention to how they pronounce words, their intonation, and rhythm. Watch movies or interviews and listen to podcasts in English, and try to imitate the way speakers articulate words. Platforms like YouTube and language learning apps often provide valuable audio resources.

2. Learn the Sounds of English

English has a variety of sounds that may not exist in your native language. Familiarize yourself with these sounds using tools like the International Phonetic Alphabet (IPA). For example, practice distinguishing between similar sounds, such as /iː/ (“sheep”) and /ɪ/ (“ship”).

3. Practice with Minimal Pairs

Minimal pairs are words that differ by only one sound, such as “bat” and “pat” or “thin” and “tin.” Practicing these pairs can help you fine-tune your ability to hear and produce distinct English sounds.

4. Focus on Stress and Intonation

English is a stress-timed language, meaning stressed syllables fall at roughly regular intervals while the unstressed syllables between them are shortened. Incorrect stress placement can make speech difficult to understand. For instance, “record” as a noun stresses the first syllable (RE-cord), while the verb stresses the second (re-CORD). Practice using the correct stress and pay attention to the natural rise and fall of sentences.

5. Slow Down and Enunciate

Speaking too quickly can amplify an accent and make it harder to pronounce words clearly. Slow down and focus on enunciating each syllable. Over time, clarity will become second nature, even at a normal speaking pace.

6. Use Pronunciation Apps and Tools

Modern technology offers numerous tools to help with pronunciation. Apps like Elsa Speak, Speechling, or even Google Translate’s audio feature can provide instant feedback on your speech. Use these tools to compare your pronunciation to that of native speakers.

7. Work with a Speech Coach or Tutor

A professional tutor can pinpoint areas where your pronunciation deviates from standard English and provide targeted exercises to address them. Many language tutors specialize in accent reduction and can help accelerate your progress.

8. Record Yourself

Hearing your own voice is a powerful way to identify areas for improvement. Record yourself reading passages or practicing conversations, then compare your speech to native speakers’ recordings.

9. Practice Daily

Consistency is key to reducing an accent. Dedicate time each day to practicing pronunciation. Whether through speaking, listening, or shadowing (repeating immediately after a speaker), regular practice builds muscle memory for English sounds.

10. Be Patient and Persistent

Reducing an accent is a gradual process that requires dedication. Celebrate small improvements and focus on becoming more comprehensible rather than achieving perfection.

Conclusion

While a foreign accent is part of your linguistic identity, reducing it can help you communicate more effectively in English. By actively listening, practicing consistently, and using available tools and resources, you can achieve noticeable improvements in your pronunciation. Remember, the goal is clarity and confidence, not eliminating your unique voice.

Geography concepts:

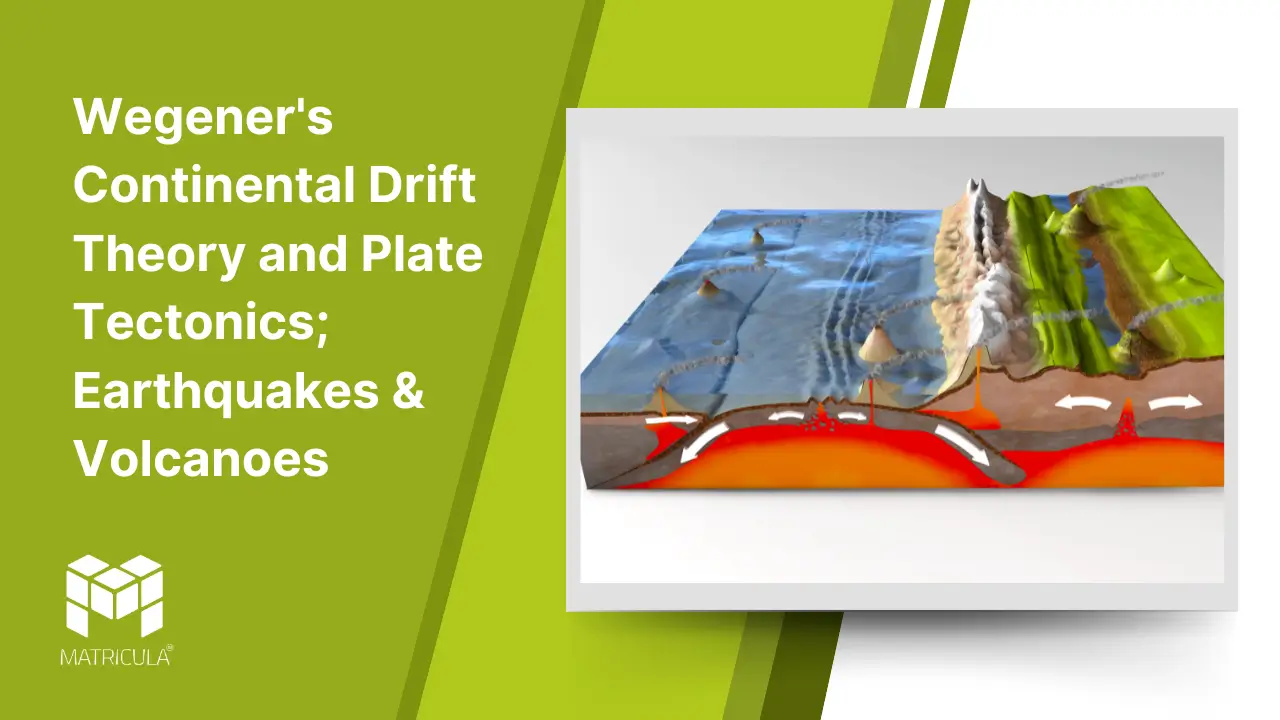

The Earth’s surface, dynamic and ever-changing, is shaped by powerful forces operating beneath the crust. Among the key theories explaining these processes are Alfred Wegener’s Continental Drift Theory and the modern understanding of Plate Tectonics. These concepts are fundamental to understanding earthquakes and volcanoes, two of the most dramatic natural phenomena.

Wegener’s Continental Drift Theory

In 1912, Alfred Wegener proposed the Continental Drift Theory, suggesting that the continents were once joined together in a single supercontinent called “Pangaea.” Over millions of years, Pangaea fragmented, and the continents drifted to their current positions.

Wegener supported his hypothesis with several lines of evidence:

- Fossil Correlation: Identical fossils of plants and animals, such as Mesosaurus and Glossopteris, were found on continents now separated by oceans.

- Geological Similarities: Mountain ranges and rock formations on different continents matched closely, such as the Appalachian Mountains in North America aligning with mountain ranges in Scotland.

- Climate Evidence: Glacial deposits in regions now tropical and coal deposits in cold areas suggested significant shifts in continental positioning.

Despite its compelling evidence, Wegener’s theory was not widely accepted during his lifetime due to the lack of a mechanism explaining how continents moved.

Plate Tectonics: The Modern Perspective

The theory of Plate Tectonics, developed in the mid-20th century, provided the mechanism that Wegener’s theory lacked. The Earth’s lithosphere is divided into large, rigid plates that float on the semi-fluid asthenosphere beneath. These plates move due to convection currents in the mantle, caused by heat from the Earth’s core.

Plate Boundaries

Divergent Boundaries: Plates move apart, forming new crust as magma rises to the surface. Example: The Mid-Atlantic Ridge.

Convergent Boundaries: Plates collide, leading to subduction (one plate sinking beneath another) or the formation of mountain ranges. Example: The Himalayas.

Transform Boundaries: Plates slide past each other horizontally, causing earthquakes. Example: The San Andreas Fault.

Earthquakes

Earthquakes occur when stress builds up along plate boundaries and is suddenly released, causing the ground to shake. They are measured using the Richter scale or the moment magnitude scale, and their epicenters and depths are crucial to understanding their impacts.

Types of Earthquakes

Tectonic Earthquakes: Caused by plate movements at boundaries.

Volcanic Earthquakes: Triggered by volcanic activity.

Human-Induced Earthquakes: Resulting from mining, reservoir-induced seismicity, or other human activities.

Volcanoes

Volcanoes are formed when magma from the Earth’s mantle reaches the surface. Their occurrence is closely linked to plate boundaries:

Subduction Zones: As one plate subducts, it melts and forms magma, leading to volcanic eruptions. Example: The Pacific Ring of Fire.

Divergent Boundaries: Magma emerges where plates pull apart, as seen in Iceland.

Hotspots: Volcanoes form over mantle plumes, independent of plate boundaries. Example: Hawaii.

Types of Volcanoes

Shield Volcanoes: Broad and gently sloping, with non-explosive eruptions.

Composite Volcanoes: Steep-sided and explosive, formed by alternating layers of lava and ash.

Cinder Cone Volcanoes: Small, steep, and composed of volcanic debris.

The Earth, a dynamic and complex planet, has a layered structure that plays a crucial role in shaping its physical characteristics and geological processes. These layers are distinguished based on their composition, state, and physical properties. Understanding the Earth’s structure is fundamental for studying phenomena such as earthquakes, volcanism, and plate tectonics.

The Earth’s Layers

The Earth is composed of three main layers: the crust, the mantle, and the core. Each layer is unique in its composition and function.

1. The Crust

The crust is the outermost and thinnest layer of the Earth. It is divided into two types:

- Continental Crust: Thicker (30-70 km), less dense, and composed mainly of granite.

- Oceanic Crust: Thinner (5-10 km), denser, and primarily composed of basalt.

The crust forms the Earth’s surface, including continents and ocean floors. It is broken into tectonic plates that float on the underlying mantle.

2. The Mantle

Beneath the crust lies the mantle, which extends to a depth of about 2,900 km. It constitutes about 84% of the Earth’s volume. The mantle is primarily composed of silicate minerals rich in iron and magnesium.

The mantle is subdivided into:

- Upper Mantle: Includes the lithosphere (rigid outer part) and the asthenosphere (semi-fluid layer that allows plate movement).

- Lower Mantle: More rigid due to increased pressure but capable of slow flow.

Convection currents in the mantle drive the movement of tectonic plates, leading to geological activity like earthquakes and volcanic eruptions.

3. The Core

The core, the innermost layer, is divided into two parts:

- Outer Core: A liquid layer composed mainly of iron and nickel. It extends from 2,900 km to 5,150 km below the surface. The movement of the liquid outer core generates the Earth’s magnetic field.

- Inner Core: A solid sphere made primarily of iron and nickel, with a radius of about 1,220 km. Despite the extreme temperatures, the inner core remains solid due to immense pressure.
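
The depth figures quoted above can be collected into a small lookup that reports which layer a given depth falls in. This is a rough sketch rather than a geophysical model: the function name is hypothetical, and the default crust thickness of 35 km is an assumed value within the continental range given earlier, since real crust thickness varies from place to place.

```python
# Boundary depths in km below the surface, taken from the figures in the text.
MANTLE_BOTTOM_KM = 2900.0       # mantle extends to about 2,900 km
OUTER_CORE_BOTTOM_KM = 5150.0   # outer core spans 2,900 km to 5,150 km
INNER_CORE_RADIUS_KM = 1220.0   # inner core radius of about 1,220 km
EARTH_RADIUS_KM = OUTER_CORE_BOTTOM_KM + INNER_CORE_RADIUS_KM  # about 6,370 km


def layer_at_depth(depth_km: float, crust_thickness_km: float = 35.0) -> str:
    """Return the name of the layer at a given depth (crust thickness is an assumption)."""
    if depth_km < 0 or depth_km > EARTH_RADIUS_KM:
        raise ValueError("depth must lie between the surface and the Earth's centre")
    if depth_km <= crust_thickness_km:
        return "crust"
    if depth_km <= MANTLE_BOTTOM_KM:
        return "mantle"
    if depth_km <= OUTER_CORE_BOTTOM_KM:
        return "outer core"
    return "inner core"


for depth in (20, 1000, 4000, 6000):
    print(depth, "km:", layer_at_depth(depth))
```

Passing a smaller `crust_thickness_km` (for example 7) approximates oceanic crust instead of continental crust.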

Transition Zones

The boundaries between these layers are marked by distinct changes in seismic wave velocities:

- Mohorovičić Discontinuity (Moho): The boundary between the crust and the mantle.

- Gutenberg Discontinuity: The boundary between the mantle and the outer core.

- Lehmann Discontinuity: The boundary between the outer core and the inner core.

Significance of the Earth’s Structure

- Seismic Studies: The study of seismic waves helps scientists understand the Earth’s internal structure and composition.

- Plate Tectonics: Knowledge of the lithosphere and asthenosphere explains plate movements and related phenomena like earthquakes and mountain building.

- Magnetic Field: The outer core’s dynamics are crucial for generating the Earth’s magnetic field, which shields the planet from the charged particles of the solar wind.

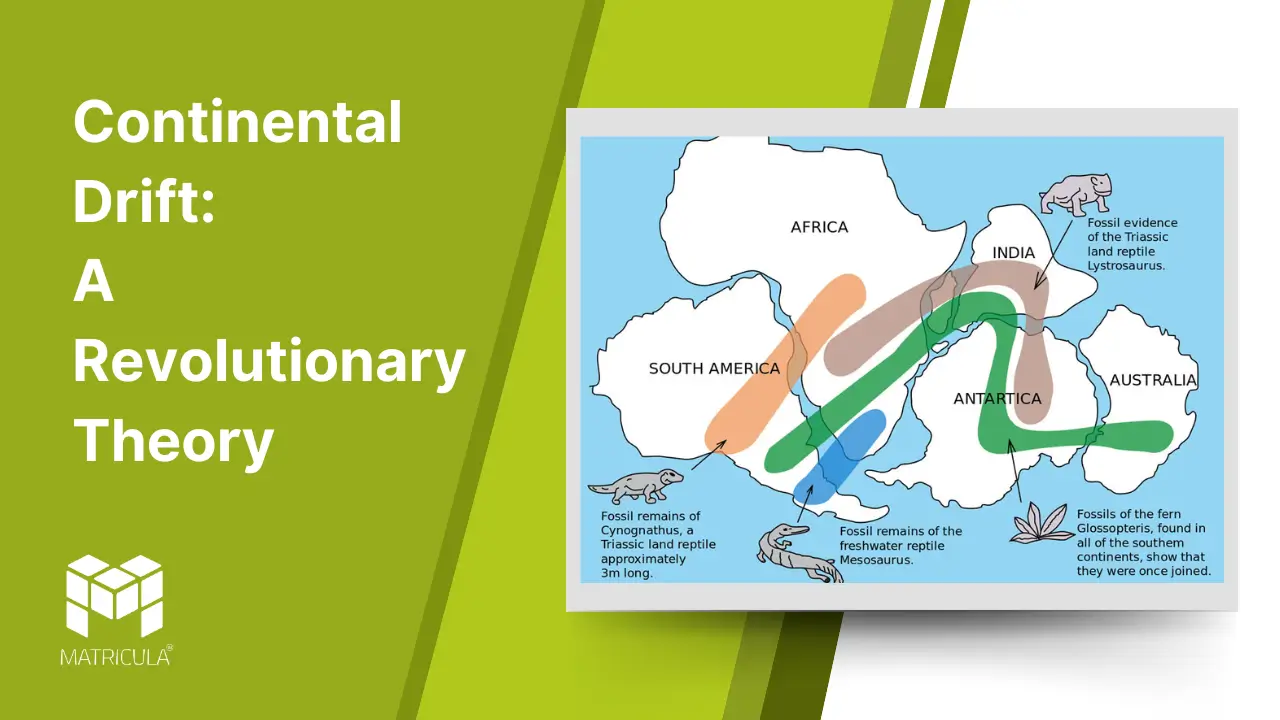

Continental drift is a scientific theory that revolutionized our understanding of Earth’s geography and geological processes. Proposed by German meteorologist and geophysicist Alfred Wegener in 1912, the theory posits that continents were once joined together in a single landmass and have since drifted apart over geological time.

The Origin of Continental Drift Theory

Alfred Wegener introduced the idea of a supercontinent called Pangaea, which existed around 300 million years ago. Over time, this landmass fragmented and its pieces drifted to their current positions. Wegener’s theory challenged the prevailing notion that continents and oceans had remained fixed since the Earth’s formation.

Evidence Supporting Continental Drift

Fit of the Continents: The coastlines of continents like South America and Africa fit together like puzzle pieces, suggesting they were once joined.

Fossil Evidence: Identical fossils of plants and animals, such as Mesosaurus (a freshwater reptile), have been found on continents now separated by oceans. This indicates that these continents were once connected.

Geological Similarities: Mountain ranges, such as the Appalachian Mountains in North America and the Caledonian Mountains in Europe, share similar rock compositions and structures, hinting at a shared origin.

Paleoclimatic Evidence: Evidence of glaciation, such as glacial striations, has been found in regions that are now tropical, like India and Africa, suggesting these regions were once closer to the poles.

Challenges to Wegener’s Theory

Despite its compelling evidence, Wegener’s theory faced criticism because he could not explain the mechanism driving the continents’ movement. At the time, the scientific community lacked knowledge about the Earth’s mantle and plate tectonics, which are now understood to be key to continental movement.

Link to Plate Tectonics

The theory of plate tectonics, developed in the mid-20th century, provided the missing mechanism for continental drift. It describes the Earth’s lithosphere as divided into tectonic plates that float on the semi-fluid asthenosphere beneath them. Convection currents in the mantle drive the movement of these plates, causing continents to drift, collide, or separate.

Impact of Continental Drift

- Formation of Landforms: The drifting of continents leads to the creation of mountain ranges, ocean basins, and rift valleys.

- Earthquakes and Volcanoes: The interaction of tectonic plates at their boundaries results in seismic and volcanic activity.

- Biogeography: The movement of continents explains the distribution of species and the evolution of unique ecosystems.

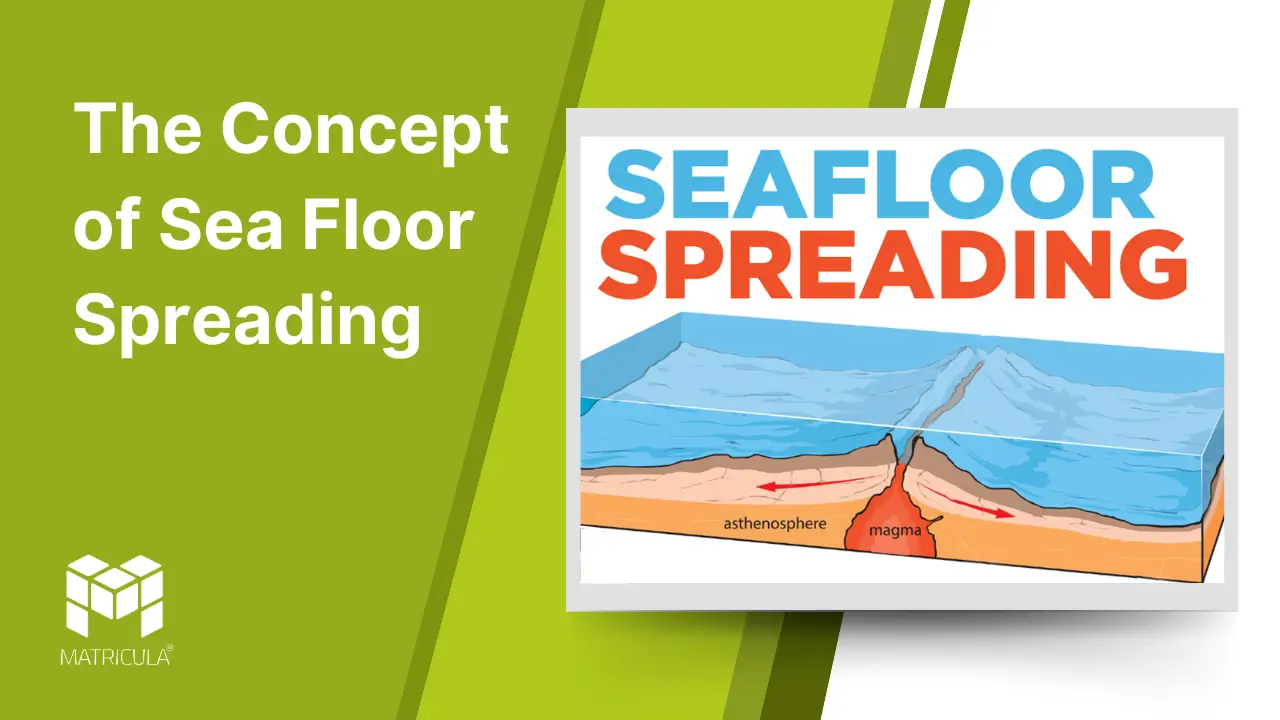

Sea floor spreading is a fundamental process in plate tectonics that explains the formation of new oceanic crust and the dynamic nature of Earth’s lithosphere. First proposed by Harry Hess in the early 1960s, this concept revolutionized our understanding of ocean basins and their role in shaping Earth’s geological features.

What is Sea Floor Spreading?

Sea floor spreading occurs at mid-ocean ridges, which are underwater mountain ranges that form along divergent plate boundaries. At these ridges, magma rises from the mantle, cools, and solidifies to create new oceanic crust. As this new crust forms, it pushes the older crust away from the ridge, causing the ocean floor to expand.

This continuous process is driven by convection currents in the mantle, which transport heat and material from Earth’s interior to its surface.

Key Features of Sea Floor Spreading

- Mid-Ocean Ridges: These are the sites where sea floor spreading begins. Examples include the Mid-Atlantic Ridge and the East Pacific Rise. These ridges are characterized by volcanic activity and high heat flow.

- Magnetic Striping: As magma solidifies at mid-ocean ridges, iron-rich minerals within it align with Earth’s magnetic field. Over time, the magnetic field reverses, creating alternating magnetic stripes on either side of the ridge. These stripes serve as a record of Earth’s magnetic history and provide evidence for sea floor spreading.

- Age of the Ocean Floor: The age of the oceanic crust increases with distance from the mid-ocean ridge. The youngest rocks are found at the ridge, while the oldest rocks are located near subduction zones where the oceanic crust is recycled back into the mantle.
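
The age-distance relationship in the list above can be turned into a rough calculation. The following is a minimal sketch, assuming a constant half-spreading rate (the speed at which one flank of the ridge moves away from the axis). The function name and the 2.5 cm/yr rate are illustrative assumptions, not values taken from this article.

```python
def crust_age_myr(distance_km: float, half_rate_cm_per_yr: float) -> float:
    """Estimate oceanic crust age (million years) from its distance to the ridge.

    Assumes a constant half-spreading rate, which is a simplification:
    real spreading rates vary over time and between ridges.
    """
    if half_rate_cm_per_yr <= 0:
        raise ValueError("half-spreading rate must be positive")
    distance_cm = distance_km * 1e5          # 1 km = 100,000 cm
    age_years = distance_cm / half_rate_cm_per_yr
    return age_years / 1e6                   # years -> million years


# Illustrative rate of 2.5 cm/yr per flank (an assumed value).
for d in (0, 250, 500, 1000):
    print(f"{d:>5} km from ridge -> about {crust_age_myr(d, 2.5):.0f} million years old")
```

Under this assumption, crust twice as far from the ridge is twice as old, which matches the pattern of the youngest rocks lying at the ridge axis.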

Evidence Supporting Sea Floor Spreading

- Magnetic Anomalies: The symmetrical pattern of magnetic stripes on either side of mid-ocean ridges corresponds to Earth’s magnetic reversals, confirming the creation and movement of oceanic crust.

- Seafloor Topography: The discovery of mid-ocean ridges and deep-sea trenches provided physical evidence for the process of spreading and subduction.

- Ocean Drilling: Samples collected from the ocean floor show that sediment thickness and crust age both increase with distance from mid-ocean ridges, supporting the idea of continuous crust formation and movement.

- Heat Flow Measurements: Elevated heat flow near mid-ocean ridges indicates active magma upwelling and crust formation.

Role in Plate Tectonics

Sea floor spreading is integral to the theory of plate tectonics, as it explains the movement of oceanic plates. The process creates new crust at divergent boundaries and contributes to plate motion, leading to interactions at convergent boundaries (subduction zones) and transform boundaries (faults).

Impact on Earth’s Geology

- Creation of Ocean Basins: Sea floor spreading shapes the structure of ocean basins, influencing global geography over millions of years.

- Earthquakes and Volcanism: The process generates earthquakes and volcanic activity at mid-ocean ridges and subduction zones.

- Continental Drift: Sea floor spreading provides a mechanism for continental drift, explaining how continents move apart over time.

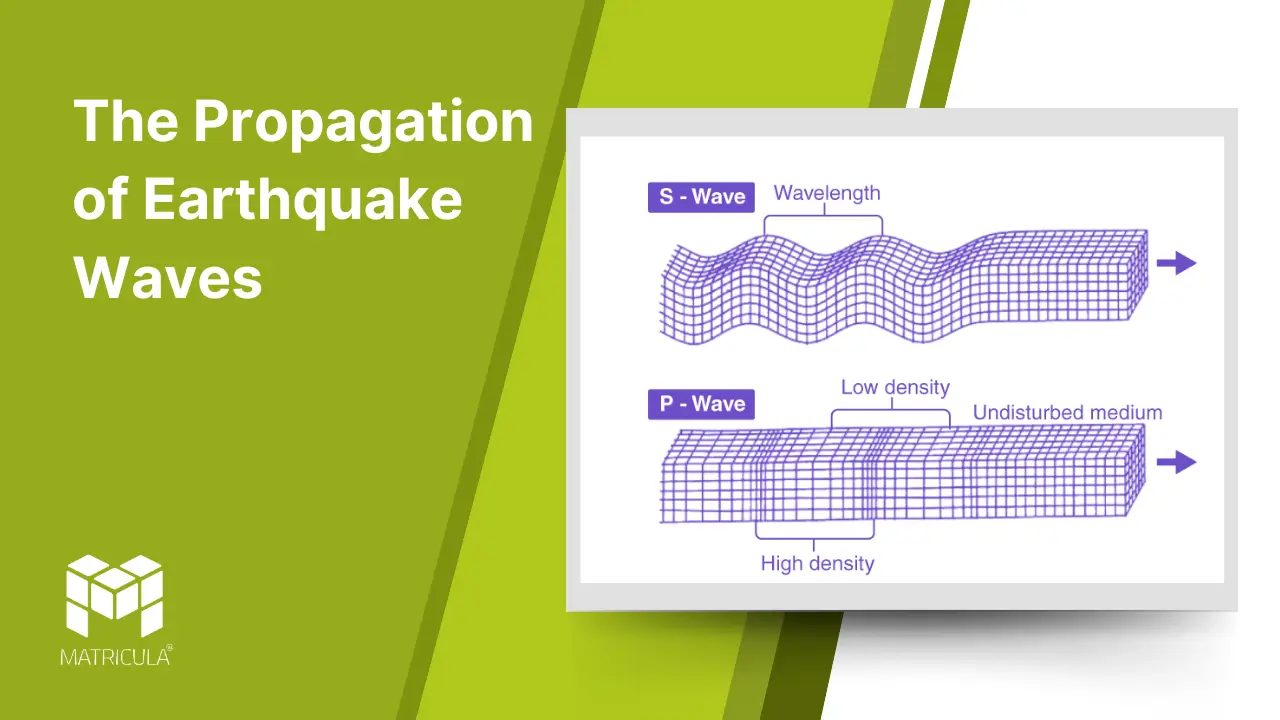

Earthquakes, one of the most striking natural phenomena, release energy in the form of seismic waves that travel through the Earth. The study of these waves is vital to understanding the internal structure of our planet and assessing the impacts of seismic activity. Earthquake waves, classified into body waves and surface waves, exhibit distinct characteristics and behaviors as they propagate through different layers of the Earth.

Body Waves

Body waves travel through the Earth’s interior and are of two main types: primary waves (P-waves) and secondary waves (S-waves).

P-Waves (Primary Waves)

- Characteristics: P-waves are compressional or longitudinal waves, causing particles in the material they pass through to vibrate in the same direction as the wave’s movement.

- Speed: They are the fastest seismic waves, traveling at speeds of 5-8 km/s in the Earth’s crust and faster still in the deeper, more rigid rock of the mantle.

- Medium: P-waves can travel through solids, liquids, and gases. Because they are the fastest seismic waves, they are the first to be detected by seismographs during an earthquake.

S-Waves (Secondary Waves)

- Characteristics: S-waves are shear or transverse waves, causing particles to move perpendicular to the wave’s direction of travel.

- Speed: They are slower than P-waves, traveling at about 3-4 km/s in the Earth’s crust.

- Medium: S-waves can only move through solids, as liquids and gases do not support shear stress.

- Significance: The inability of S-waves to pass through the Earth’s outer core provides evidence of its liquid nature.
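
Because P-waves outrun S-waves, the gap between their arrival times grows with distance from the earthquake. The following is a minimal sketch of that idea, assuming straight-line travel at constant speeds. The default speeds of 6 km/s and 3.5 km/s are illustrative picks from within the crustal ranges quoted above, and the function name is hypothetical.

```python
def distance_from_sp_lag(lag_s: float, vp_km_s: float = 6.0, vs_km_s: float = 3.5) -> float:
    """Estimate distance (km) to an earthquake from the S-minus-P arrival lag.

    Travel times are d/vp and d/vs, so lag = d/vs - d/vp,
    which rearranges to d = lag / (1/vs - 1/vp).
    """
    if lag_s < 0:
        raise ValueError("lag must be non-negative")
    if not (vp_km_s > vs_km_s > 0):
        raise ValueError("expected vp > vs > 0")
    return lag_s / (1.0 / vs_km_s - 1.0 / vp_km_s)


# Assumed example lags, in seconds, as read from a single seismograph.
for lag in (5, 10, 20):
    print(f"S-P lag of {lag:>2} s -> roughly {distance_from_sp_lag(lag):.0f} km away")
```

With these assumed speeds, each second of lag corresponds to about 8.4 km. A single station yields only a distance, so readings from at least three stations are needed to pin down the epicenter.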

Surface Waves

Surface waves travel along the Earth’s surface and are slower than body waves. However, they often cause the most damage during earthquakes due to their high amplitude and prolonged shaking. There are two main types of surface waves: Love waves and Rayleigh waves.

Love Waves

- Characteristics: Love waves cause horizontal shearing of the ground, moving the surface side-to-side.

- Impact: They are highly destructive to buildings and infrastructure due to their horizontal motion.

Rayleigh Waves

- Characteristics: Rayleigh waves generate a rolling motion, combining both vertical and horizontal ground movement.

- Appearance: Their motion resembles that of ocean waves, and they can be felt at greater distances from the earthquake’s epicenter.

Propagation Through the Earth

The behavior of earthquake waves provides invaluable information about the Earth’s internal structure:

- Reflection and Refraction: As seismic waves encounter boundaries between different materials, such as the crust and mantle, they reflect or refract, altering their speed and direction.

- Shadow Zones: P-waves and S-waves create shadow zones—regions on the Earth’s surface where seismic waves are not detected—offering clues about the composition and state of the Earth’s interior.

- Wave Speed Variations: Changes in wave velocity reveal differences in density and elasticity of the Earth’s layers.
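
The refraction described in the list above follows Snell’s law, sin(i)/v1 = sin(r)/v2, where i and r are the angles of the incoming and refracted rays and v1 and v2 are the wave speeds on either side of the boundary. The following is a minimal sketch, assuming a flat boundary between two uniform layers. The function name and the speeds used are illustrative assumptions, not measured values.

```python
import math
from typing import Optional


def refraction_angle_deg(incidence_deg: float, v1_km_s: float, v2_km_s: float) -> Optional[float]:
    """Angle of the refracted ray (degrees from the boundary normal) via Snell's law.

    Returns None when the ray is totally reflected (no transmitted ray).
    """
    s = math.sin(math.radians(incidence_deg)) * v2_km_s / v1_km_s
    if abs(s) > 1.0:
        return None  # beyond the critical angle
    return math.degrees(math.asin(s))


# Illustrative P-wave speeds: 6 km/s above the boundary, 8 km/s below (assumed).
for angle in (10, 30, 60):
    result = refraction_angle_deg(angle, 6.0, 8.0)
    label = "totally reflected" if result is None else f"{result:.1f} degrees"
    print(f"incidence {angle} degrees -> {label}")
```

When a wave enters a faster layer, the ray bends away from the normal, and at steep enough incidence it is reflected entirely. When the speeds on both sides are equal, the ray passes through without bending.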

History concepts:

François Bernier, a French physician and traveler from the 17th century, is often remembered not only for his medical expertise but also for his distinctive approach to anthropology and his contribution to the understanding of race and society. His unique career and pioneering thoughts have left an indelible mark on both medical history and social science.

Early Life and Education

Born in 1620 at Joué in Anjou, western France, François Bernier was initially drawn to the medical field. He studied at the University of Montpellier, one of the most renowned medical schools of the time, where he earned his degree in medicine. However, it was not just the practice of medicine that fascinated Bernier; his intellectual curiosity stretched far beyond the confines of the classroom, drawing him to explore various cultures and societies across the world.

A Journey Beyond Medicine

In 1656, Bernier left France on a journey that took him to the Mughal Empire, one of the most powerful and culturally rich regions of the time, where he served as a physician at the Mughal court. His experiences in India greatly influenced his thinking and the trajectory of his career. During his time in the subcontinent, Bernier not only treated members of the court but also observed the vast cultural and racial diversity within the empire.

His observations were not just medical but also social and anthropological, laying the foundation for his most famous work, Travels in the Mughal Empire. In his book, Bernier provided a detailed account of the Mughal Empire’s political structure, the customs of its people, and the unique geography of the region. However, it was his discussions on race and human classification that were most groundbreaking.

Bernier’s View on Race

François Bernier’s thoughts on race were far ahead of his time. In a work published in 1684, Nouvelle division de la terre par les différentes espèces ou races d’hommes qui l’habitent (A New Division of the Earth by the Different Species or Races of Men that Inhabit It), Bernier proposed a classification of humans based on physical characteristics, which is considered one of the earliest attempts at racial categorization in scientific discourse.

Bernier divided humanity into four major “races,” a concept he introduced to explain the differences he observed in people across different parts of the world. These were a broad group that included Europeans along with the peoples of North Africa, the Middle East, and India; sub-Saharan Africans; East Asians; and the Lapps (Sami) of northern Scandinavia. While his ideas on race are considered outdated and problematic today, they were novel for their time and laid the groundwork for later anthropological and racial theories.

Legacy and Influence

Bernier’s contributions went beyond the realm of medicine and anthropology. His writings were influential in European intellectual circles and contributed to the growing European interest in the non-Western world. His observations, especially regarding the Indian subcontinent, provided European readers with a new understanding of distant lands and cultures. In the context of medical history, his role as a physician in the Mughal court also underscores the importance of medical exchanges across different cultures during the 17th century.

François Bernier died in 1688, but his legacy continued to shape the fields of medicine, anthropology, and colonial studies long after his death. Although his views on race would be critically examined and challenged in the centuries to follow, his adventurous spirit and intellectual curiosity left an indelible mark on the study of human diversity and the interconnectedness of the world.

In the annals of history, few individuals have demonstrated intellectual curiosity and openness to other cultures as vividly as Al-Biruni. A Persian polymath born in 973 CE, Al-Biruni is celebrated for his pioneering contributions to fields such as astronomy, mathematics, geography, and anthropology. Among his most remarkable achievements is his systematic study of India, captured in his seminal work, Kitab al-Hind (The Book of India). This text is a testament to Al-Biruni’s efforts to make sense of a culture and tradition vastly different from his own, often described as the “Sanskritic tradition.”

Encountering an “Alien World”

Al-Biruni’s journey to India was a consequence of the conquests of Mahmud of Ghazni, whose campaigns brought the scholar into contact with the Indian subcontinent. Rather than viewing India solely through the lens of conquest, Al-Biruni sought to understand its intellectual and cultural heritage. His approach was one of immersion: he studied Sanskrit, the classical language of Indian scholarship, and engaged deeply with Indian texts and traditions.

This effort marked Al-Biruni as a unique figure in the cross-cultural exchanges of his time. Where others may have dismissed or misunderstood India’s complex systems of thought, he sought to comprehend them on their own terms, recognizing the intrinsic value of Indian philosophy, science, and spirituality.

Decoding the Sanskritic Tradition

The Sanskritic tradition, encompassing India’s rich repository of texts in philosophy, religion, astronomy, and mathematics, was largely inaccessible to outsiders due to its linguistic and cultural complexity. Al-Biruni overcame these barriers by studying key Sanskrit texts like the Brahmasphutasiddhanta of Brahmagupta, a seminal work on astronomy and mathematics.

In Kitab al-Hind, Al-Biruni systematically analyzed Indian cosmology, religious practices, and societal norms. He compared Indian astronomy with the Ptolemaic system prevalent in the Islamic world, highlighting areas of convergence and divergence. He also explored the philosophical underpinnings of Indian religions such as Hinduism, Buddhism, and Jainism, offering detailed accounts of their doctrines, rituals, and scriptures.

What set Al-Biruni apart was his objectivity. Unlike many medieval accounts, his descriptions avoided denigration or stereotyping. He acknowledged the strengths and weaknesses of Indian thought without imposing his own cultural biases, striving for an intellectual honesty that remains a model for cross-cultural understanding.

Bridging Cultures Through Scholarship

Al-Biruni’s work was not merely an intellectual exercise but a bridge between civilizations. By translating and explaining Indian ideas in terms familiar to Islamic scholars, he facilitated a dialogue between two great intellectual traditions. His observations introduced the Islamic world to Indian advances in mathematics, including concepts of zero and decimal notation, which would later influence global scientific progress.

Moreover, his nuanced portrayal of Indian culture countered the simplistic narratives of foreign conquest, offering a more empathetic and respectful view of a complex society.

Legacy and Relevance

Al-Biruni’s approach to the Sanskritic tradition underscores the timeless value of intellectual curiosity, humility, and cultural exchange. His work demonstrates that understanding an “alien world” requires not just knowledge but also respect for its inherent logic and values. In a world increasingly defined by globalization, his legacy offers a compelling blueprint for navigating cultural diversity with insight and empathy.

Al-Biruni remains a shining example of how scholarship can transcend the boundaries of language, religion, and geography, enriching humanity’s collective understanding of itself.

The social fabric of historical societies often reflects the complex interplay of power, gender, and labor. The lives of women slaves, the practice of Sati, and the conditions of laborers are poignant examples of the systemic inequalities and cultural practices that shaped these societies, particularly in the Indian subcontinent and beyond.

Women Slaves: Instruments of Power and Oppression